How Planning Agents in AI Are Turning Goals Into Action

You’ve probably seen this play out: you prompt an AI to “book a meeting, draft the agenda, and send reminders.” It does one of those, maybe two, before going off-script or asking you to “clarify.” Smart? Sure. Goal-oriented? Not even close!

This is exactly the limitation of most AI tools today: they respond in the moment but lose direction over time.

Enter the planning agent in AI: the decision-making engine that breaks down a goal, charts the steps to reach it, and adjusts when things change. From self-driving vehicles to scheduling tools, planning agents are what make AI systems capable of seeing the bigger picture and following through.

This piece unpacks how these agents work and what sets them apart from reactive models. We'll also look at real-world applications to give you a starting point for integrating these goal-based intelligent agents into your workflow.

What is a planning agent in AI?

A planning agent in AI is an intelligent system that figures out the best sequence of actions to reach a goal. It thinks ahead, makes a plan, and then follows it to complete a task, just as you plan your day step by step to get everything done.

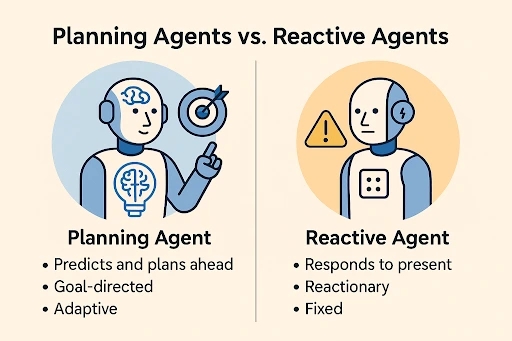

Planning agents vs. reactive agents

Most AI tools today are great at reacting. You ask, they respond. But when you give them a goal like “organise a review before Friday,” they stall. Maybe they suggest a few slots or ask for more info, but they rarely follow through. That’s the difference between reactive systems and planning agents.

Reactive agents respond to triggers: “If X happens, do Y.” They’re fast, simple, and useful for one-off decisions, like flagging a spam email or auto-suggesting a reply. But they lack memory, foresight, and multi-step execution.

Planning agents, on the other hand, operate with intent. They break goals into smaller tasks, decide what needs to happen first, evaluate trade-offs, and adapt when things change. Instead of reacting to each command, they own the process end-to-end. This is also what powers real-world AI, such as workflow automation and intelligent scheduling.

How planning agents work: The internal loop

Planning agents operate through a continuous loop: perceive → evaluate → plan → act. Each part of this loop plays a role in helping the agent stay aligned with its goal and adapt as needed.

Let’s break down what happens at each stage:

- Perception and input handling: The agent gathers information from its environment. This could be a user command, data from an API, or sensor inputs. It filters noise and builds a usable picture of the current state.

- Planning and task decomposition: Once the goal is clear, the agent breaks it down into smaller actions, identifies what needs to happen first, and in what sequence.

- Memory: Short-term memory tracks context (like a recent user prompt), while long-term memory stores useful data or patterns from the past. This helps the agent maintain continuity and avoid repeating steps.

- Reasoning and decision-making: The agent weighs different options and selects the next step based on logic, past behaviour, or goal alignment. This might involve rule-based reasoning, where decisions follow fixed logic like 'if X, then Y', or more advanced techniques like utility-driven AI planning, where actions are compared based on expected outcomes, efficiency, or impact.

- Action and tool calling: Once a decision is made, the agent carries it out. This could mean calling an API, triggering a device, or updating a system. Tool calling is the mechanism that allows the agent to interact with external software, databases, or services, bridging the gap between internal logic and external execution.
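To make the loop concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a production design: the `ToyAgent` class, its method names, and the three lambda "tools" are all hypothetical, and the reasoning stage is reduced to simply taking the remaining steps in order.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    """Hypothetical agent that walks a perceive -> plan -> act loop."""
    goal: list[str]                                   # sub-tasks that make up the goal
    tools: dict                                       # tool name -> callable (tool calling)
    memory: list[str] = field(default_factory=list)   # steps already completed

    def perceive(self, environment: dict) -> dict:
        # Noise filtering: keep only inputs that map to a known tool.
        return {k: v for k, v in environment.items() if k in self.tools}

    def plan(self) -> list[str]:
        # Task decomposition: goal steps that are not yet in memory.
        return [step for step in self.goal if step not in self.memory]

    def act(self, step: str, state: dict) -> str:
        result = self.tools[step](state.get(step))
        self.memory.append(step)                      # remember, so steps are not repeated
        return result

    def run(self, environment: dict) -> list[str]:
        state = self.perceive(environment)
        return [self.act(step, state) for step in self.plan()]


# Hypothetical tools standing in for real calendar and email APIs.
tools = {
    "book_meeting": lambda slot: f"booked {slot}",
    "draft_agenda": lambda topic: f"agenda for {topic}",
    "send_reminders": lambda who: f"reminded {who}",
}
agent = ToyAgent(goal=["book_meeting", "draft_agenda", "send_reminders"], tools=tools)
results = agent.run({
    "book_meeting": "Fri 10:00",
    "draft_agenda": "Q3 review",
    "send_reminders": "team",
    "weather": "sunny",                               # irrelevant input, filtered out
})
print(results)
```

Running the agent a second time returns an empty list, because memory already records every step as done.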

How planning agents fit into goal-directed workflows

68% of organisations[1] say that their mission-critical processes become harder to manage as more tasks get automated. To keep those processes on course, AI planning agents rely on a mix of techniques that help them stay aligned with their goals, even when the ground shifts.

Here's what they are:

STRIPS-based agent planning

Short for “Stanford Research Institute Problem Solver,” STRIPS is a classic model used in automated task planning. Every action an agent can take has defined “before” and “after” conditions (its preconditions and effects), almost like a checklist. The agent starts with the current state, checks which actions are valid, and applies them step-by-step to get closer to the goal.

STRIPS is more about logical progression: if the floor is dirty, clean it; if it's clean, move to the next one. There is zero guesswork, only systematic execution.
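The floor-cleaning example can be written as a small STRIPS-style planner. This is a simplified sketch: the two-room world, the fact names, and the `strips_plan` helper are assumptions made for illustration, and the search is plain breadth-first rather than anything a production planner would use.

```python
from collections import deque
from typing import NamedTuple


class Action(NamedTuple):
    name: str
    preconditions: frozenset   # facts that must hold before the action
    add: frozenset             # facts the action makes true
    delete: frozenset          # facts the action makes false


def strips_plan(initial, goal, actions):
    """Breadth-first forward search over states (sets of true facts)."""
    start, goal = frozenset(initial), frozenset(goal)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, plan = frontier.popleft()
        if goal <= state:                       # every goal fact holds
            return plan
        for a in actions:
            if a.preconditions <= state:        # the action is valid here
                nxt = (state - a.delete) | a.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, plan + [a.name]))
    return None                                 # goal is unreachable


# Hypothetical two-room cleaning world.
f = frozenset
actions = [
    Action("clean_A", f({"at_A", "dirty_A"}), f({"clean_A"}), f({"dirty_A"})),
    Action("clean_B", f({"at_B", "dirty_B"}), f({"clean_B"}), f({"dirty_B"})),
    Action("move_A_B", f({"at_A"}), f({"at_B"}), f({"at_A"})),
    Action("move_B_A", f({"at_B"}), f({"at_A"}), f({"at_B"})),
]
plan = strips_plan({"at_A", "dirty_A", "dirty_B"}, {"clean_A", "clean_B"}, actions)
print(plan)  # ['clean_A', 'move_A_B', 'clean_B']
```

Because the search is breadth-first, the first plan it finds is also one of the shortest.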

Heuristic planning in AI

Instead of checking every possible option, the agent uses heuristics, shortcuts based on experience or rules of thumb, to estimate the most efficient path. This can be useful in scenarios with many variables, like logistics or scheduling.

A warehouse robot, for instance, might use heuristic planning to head for the closest pickup first instead of comparing every possible route.
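The closest-pickup rule is easy to sketch. The `nearest_first` function and the grid coordinates below are hypothetical, and the rule is a greedy heuristic: it is fast, but it does not guarantee the shortest overall route.

```python
def nearest_first(start, pickups):
    """Greedy heuristic: always travel to the closest remaining pickup."""
    def manhattan(a, b):
        # Grid distance, assuming the robot moves along aisles.
        return abs(a[0] - b[0]) + abs(a[1] - b[1])

    route, position, remaining = [], start, list(pickups)
    while remaining:
        nxt = min(remaining, key=lambda p: manhattan(position, p))
        remaining.remove(nxt)
        route.append(nxt)
        position = nxt
    return route


# Hypothetical pickup locations as (row, column) cells.
route = nearest_first((0, 0), [(5, 5), (1, 0), (2, 3)])
print(route)  # [(1, 0), (2, 3), (5, 5)]
```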

Utility-driven AI planning

Each action is scored based on how useful it is, and the agent picks the one with the highest value. These scores can reflect expected outcomes, efficiency, cost, or alignment with broader goals.

This technique is especially useful when multiple valid options exist, but some are clearly more effective or resource-friendly than others. An example would be a customer support agent choosing between escalating a case or resolving it with a knowledge base article, based on past success rates.
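As a rough sketch of that support example, the snippet below scores two options and picks the higher one. The success rates, values, and costs are invented numbers, and `expected_utility` is just one plausible scoring rule among many.

```python
def expected_utility(option):
    # Chance of success times the value of success, minus the cost of trying.
    return option["success_rate"] * option["value"] - option["cost"]


# Hypothetical figures; in practice these would come from past ticket data.
options = [
    {"name": "send_kb_article", "success_rate": 0.6, "value": 10, "cost": 1},
    {"name": "escalate_to_human", "success_rate": 0.95, "value": 10, "cost": 6},
]
best = max(options, key=expected_utility)
print(best["name"])  # send_kb_article
```

With these numbers the article scores about 5 and escalation about 3.5, so the agent tries the article first. Change the cost of escalation and the choice can flip.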

Partial-order and probabilistic planning

Not every task follows a strict to-do list. Partial-order planning gives agents the flexibility to decide which steps can happen when, especially if some actions aren’t dependent on others.

It’s useful in scenarios like warehouse picking or software setup, where certain tasks can run in parallel or out of order to save time and reduce delays.
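One simple way to represent a partial order is a dependency graph, where only the steps that truly depend on each other are ordered. The sketch below uses Python's standard `graphlib` module (Python 3.9+) to group a software setup into batches that could run in parallel. The step names are hypothetical, and this shows only the ordering side of partial-order planning, not a full planner.

```python
from graphlib import TopologicalSorter

# step -> steps that must finish first (hypothetical software setup)
depends_on = {
    "install_runtime": set(),
    "create_database": set(),
    "install_app": {"install_runtime"},
    "run_migrations": {"create_database", "install_app"},
}

sorter = TopologicalSorter(depends_on)
sorter.prepare()
batches = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())   # steps with no unmet dependencies
    batches.append(ready)                # everything in a batch can run in parallel
    sorter.done(*ready)

print(batches)
# [['create_database', 'install_runtime'], ['install_app'], ['run_migrations']]
```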

Probabilistic planning, on the other hand, prepares the agent for uncertainty. When outcomes aren’t guaranteed, like whether a tool will respond or a route stays clear, the agent builds fallback options to stay on track.

Think of a disaster response bot that not only plans the fastest evacuation route but also maps backup paths in case conditions suddenly change.
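A toy version of that evacuation planner might rank routes by expected travel time and keep the runners-up as fallbacks. All the numbers and the `rank_routes` helper are assumptions for illustration; real probabilistic planners model uncertainty in far more detail.

```python
def rank_routes(routes):
    """Order routes by expected travel time, accounting for blockage risk."""
    def expected_minutes(r):
        # Assumption: a blocked route costs an extra `detour_penalty` minutes.
        return r["minutes"] + r["p_blocked"] * r["detour_penalty"]
    return sorted(routes, key=expected_minutes)


# Hypothetical routes with made-up blockage probabilities.
routes = [
    {"name": "highway", "minutes": 10, "p_blocked": 0.5, "detour_penalty": 20},
    {"name": "side_streets", "minutes": 15, "p_blocked": 0.1, "detour_penalty": 20},
    {"name": "river_road", "minutes": 25, "p_blocked": 0.0, "detour_penalty": 20},
]
primary, *fallbacks = rank_routes(routes)
print(primary["name"], [r["name"] for r in fallbacks])
```

The highway is fastest on paper, but its high risk of blockage pushes it into a fallback slot behind the side streets.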

AI agent decision trees

The agent moves through a branching flow, where each step depends on previous choices or outcomes. This approach is often used in troubleshooting, onboarding, or adaptive workflows where logic needs to change on the fly.

A good example is a tech support bot navigating through a diagnostic flow based on user inputs and prior issues.
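A diagnostic flow like this can be sketched as a nested dictionary that the agent walks until it reaches a recommendation. The questions, answers, and the `diagnose` function are hypothetical.

```python
# Hypothetical diagnostic tree: dicts are questions, strings are final actions.
tree = {
    "question": "device_powers_on",
    "yes": {
        "question": "wifi_connected",
        "yes": "reinstall_app",
        "no": "reset_router",
    },
    "no": "check_power_cable",
}


def diagnose(node, answers):
    # Walk down the tree until a leaf (a string recommendation) is reached.
    while isinstance(node, dict):
        branch = "yes" if answers[node["question"]] else "no"
        node = node[branch]
    return node


print(diagnose(tree, {"device_powers_on": True, "wifi_connected": False}))  # reset_router
```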

Multi-agent planning systems

In a system like this, multiple agents collaborate, dividing tasks, syncing updates, and adjusting plans as a team.

Common in logistics, robotics, and real-time games, these agents work toward shared goals without stepping on each other’s toes. Picture a fleet of drones: one reads traffic, another checks the weather, and a third reroutes to avoid collisions. Together, they behave not as individuals but as a unified, goal-based intelligent agent network.
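The division of labour in the drone example can be sketched as a simple allocation rule: give each task to the least-loaded agent that has the right skill. The agent names, skills, and effort values are hypothetical, and real multi-agent systems usually add negotiation and shared-state syncing on top.

```python
def assign_tasks(tasks, agents):
    """Give each task to the least-loaded agent that has the required skill."""
    load = {name: 0 for name in agents}
    assignment = {}
    for task, (skill, effort) in tasks.items():
        capable = [a for a, skills in agents.items() if skill in skills]
        if not capable:
            assignment[task] = None            # nobody can do it; flag for a human
            continue
        chosen = min(capable, key=lambda a: load[a])
        assignment[task] = chosen
        load[chosen] += effort
    return assignment


# Hypothetical fleet: agent -> skills, and task -> (required skill, effort).
agents = {"drone_1": {"traffic", "weather"}, "drone_2": {"weather"}, "drone_3": {"routing"}}
tasks = {
    "read_traffic": ("traffic", 3),
    "check_weather": ("weather", 2),
    "reroute": ("routing", 1),
}
assignment = assign_tasks(tasks, agents)
print(assignment)
```

Here `drone_1` could also check the weather, but it is already busy with traffic, so that task goes to `drone_2`.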

Applications of planning agents in AI systems

By 2025, 60% of U.S. enterprises[2] are expected to adopt AI planning agents to streamline decision-making and automate complex workflows. These systems are no longer niche; they’re powering tools you probably already use or rely on.

Here's what that looks like in the real world:

Robotics and navigation

Autonomous robots, like the ones Amazon uses in its warehouses, don’t just follow lines on the floor. Planning agents help them calculate optimal routes, avoid obstacles, and get from point A to B even as conditions shift. Bosch also uses planning agents to safely guide factory-floor robots in live production environments.

Game AI and virtual agents

If you’ve ever played a game where non-player characters seem to learn or outsmart you, that’s often thanks to multi-agent planning systems. While you're focused on breaking through defences, one agent is already rerouting supplies, another is building reinforcements, and a third is adjusting attack timing based on your strategy.

Together, they act as a coordinated team behind the scenes, making the game smarter and more unpredictable. Frameworks like GameVLM are making this coordination even more advanced in today’s AI-powered games.

Autonomous workflow orchestration

Help desk tickets don’t magically resolve themselves, but to the user, it’s starting to feel that way. Platforms use automated task planning powered by planning agents to evaluate context, assign priorities, and carry out coordinated workflows. This is the benefit of autonomous workflow orchestration!

This kind of behind-the-scenes orchestration has helped reduce resolution times by 52%[3], without needing human intervention at every step.

Smart assistants and scheduling

When Google Assistant reschedules your meeting or Calendly juggles multiple calendars without breaking a sweat, that’s a goal-based intelligent agent at work. These assistants don’t just respond. They follow through by checking availability, managing conflicts, and sending reminders without needing micromanagement.

From smart assistants to robotics, AI planning agents are quietly powering systems that think ahead. As adoption grows, they'll move from support roles to core drivers of how work gets done.

And for businesses? That means less micromanagement, fewer dropped balls, and a lot more done with less.

4 challenges faced by planning agents

Planning agents are powerful, but they’re not invincible. Like any intelligent system, they run into real limitations that can affect performance and consistency. Knowing where these cracks show up helps you design more robust systems in the real world.

Let’s break down what can go wrong and what you can do about it:

1. Real-time decision constraints

In fast-paced environments, AI planning agents can slow things down. These systems need time to think: evaluating options, predicting outcomes, and planning the next steps. But in situations like warehouse automation or autonomous driving, a delay of even a second can disrupt everything around it.

That’s why many systems also use reactive agents: a simpler type of AI that responds instantly to changes without long-term planning. For example, if a robot sees an obstacle, the reactive layer might swerve immediately, while the planning agent figures out the bigger reroute. This combination helps balance speed and strategy, so the system stays both responsive and goal-focused.
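In code, this layering can be as simple as checking the reflex rule before consulting the plan. The `control_step` function, the sensor key, and the action strings are hypothetical.

```python
def control_step(sensors, planned_route):
    """Reactive layer first; otherwise follow the planner's next waypoint."""
    if sensors.get("obstacle_ahead"):
        return "swerve"                       # instant reflex, no deliberation
    if planned_route:
        return f"go_to:{planned_route[0]}"    # next step from the slower planner
    return "idle"


print(control_step({"obstacle_ahead": True}, ["dock_3"]))  # swerve
print(control_step({}, ["dock_3", "dock_7"]))              # go_to:dock_3
```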

2. Handling uncertainty and dynamic environments

Ever tried booking a meeting room online, only to find it was taken by the time you hit "confirm"? Planning agents in AI face similar unpredictability. They build a plan assuming the world won’t change, but the world often does. APIs go down, data gets updated, or dependencies vanish mid-task.

To navigate this, agents need the ability to adapt mid-process. Techniques like heuristic planning in AI help the agent choose a smart path from the start, using experience-based shortcuts instead of testing every possibility.

But when things shift after a plan is already in motion, it’s dynamic replanning that takes over. The agent revisits its goal, re-evaluates the current state, and creates a new plan in response to what just changed. Without this flexibility, even the best-laid plans fall apart in real-world use.
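The meeting-room scenario gives a compact picture of replanning: try the current best option, and if the world has changed, update the state and plan again. The `book_with_replanning` function and the `try_book` callback are hypothetical stand-ins for a real booking API.

```python
def book_with_replanning(candidate_rooms, try_book, max_attempts=3):
    """Try the current plan; if it fails, update the state and replan."""
    remaining = list(candidate_rooms)
    for _ in range(max_attempts):
        if not remaining:
            break
        room = remaining[0]            # current plan: best remaining room
        if try_book(room):
            return room
        remaining.remove(room)         # the world changed: drop it and replan
    return None                        # give up and hand back to the user


# Hypothetical world state: room A was taken just before we confirmed.
taken = {"A"}
booked = book_with_replanning(["A", "B", "C"], lambda room: room not in taken)
print(booked)  # B
```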

3. Resource optimisation and scalability

Planning agents can only juggle so much at once. Just think of trying to coordinate a 10-step onboarding flow while the system is low on memory, API calls are limited, and new requests keep coming in. Even a goal-based intelligent agent will eventually stall if it’s stretched too thin.

One solution? Use multi-agent planning systems, where multiple agents divide and conquer. While one handles scheduling, another can manage document verification or follow-ups. It’s like having a team instead of one overworked assistant, making the whole system more scalable and resilient under pressure.

4. Alignment and intent mismatch

Say you ask an AI to “optimise meeting time,” and it books everyone for 7 A.M. because, technically, that’s when everyone’s free. It’s not wrong, but it’s not right either. This kind of mismatch happens when goal-based intelligent agents interpret success purely through logic, without understanding context, preferences, or intent.

To reduce this, systems need clearer utility-driven AI planning frameworks that define success not just as completion, but as satisfaction.

Three mechanisms help here: feedback loops that let the agent learn from past results, preference memory that records user likes and dislikes, and alignment layers that check its actions against real-world expectations, not just logic.
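Returning to the 7 A.M. meeting, a preference-aware score is one small way to encode "satisfaction" rather than mere availability. The scoring weights, the preferred-hours range, and the `slot_utility` function are all assumptions made for this sketch.

```python
def slot_utility(hour, everyone_free, preferred_hours=range(9, 18)):
    """Score a meeting slot: availability matters, but so do preferences."""
    score = 10 if everyone_free else 0
    if hour not in preferred_hours:
        score -= 8                     # hypothetical penalty for antisocial hours
    return score


# Hypothetical calendar: hour -> is everyone free?
slots = {7: True, 11: True, 15: False}
best_hour = max(slots, key=lambda h: slot_utility(h, slots[h]))
print(best_hour)  # 11
```

Without the penalty, 7 A.M. and 11 A.M. would tie on pure availability; with it, the agent picks the slot people are likely to want.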

What’s next for planning agents in AI systems?

As AI matures, planning agents are becoming more autonomous and better at checking their own work. The next wave is already here: hierarchical planners that break mega-goals into sub-goals, self-evaluation mechanisms that let agents spot their own mistakes, and cheaper, faster inference that makes all of this scalable across workflows.

Expect AI planning agents to get sharper at nuance: choosing not just the next step, but the right one based on intent, trade-offs, and constraints. Think fewer rigid flows, more intelligent delegation.

The bottom line? Goal-based intelligent agents are moving from back-end automation to front-line decision-makers.

At GrowthJockey, we help businesses, including enterprise teams, build, scale, and integrate these agent-led systems end to end. From planning architecture to tool orchestration, we offer tailored AI and ML solutions that unlock intelligent performance at every stage of growth.

FAQs on planning agents in AI

1. Where are AI planning agents used in the real world?

You will find AI planning agents in autonomous robots that optimise pick paths, in smart assistants that juggle multi-calendar meetings, and in logistics platforms that reroute fleets when traffic changes. Multi-agent planning systems even coordinate disaster-response drones, proving that intelligent action sequencing can scale from desktop apps to mission-critical operations.

2. Which techniques power automated task planning in AI?

Common methods include STRIPS-based agent planning for logical progression, heuristic planning for quick path estimates, and utility-driven AI planning that scores each action for value.

Advanced deployments blend decision trees with probabilistic models so agents handle uncertainty. Together, these tools fuel the automated task planning that turns objectives into real-time execution.

3. How does automated task planning scale in multi-agent environments?

In multi-agent planning systems, each agent owns a slice of the workload (inventory checks, route mapping, or customer updates) while a coordination layer syncs shared state. Agents publish progress, subscribe to others’ outputs, and re-plan locally when inputs shift. This divide-and-conquer model lets automated task planning handle thousands of parallel decisions without central bottlenecks.

4. Why is utility-driven AI planning important for business workflows?

Utility-driven AI planning assigns a numeric “value” to every possible action. Factors such as cost saved, time gained, and risk reduced feed into that value, so the planning agent can pick the highest-ROI step at each turn.

In finance ops, for example, the agent might weigh early-payment discounts against cash-flow constraints, selecting the path that maximises net utility rather than merely finishing a task list.