How Agentic AI Memory Turns Simple Bots into Truly Intelligent Agents: Importance & Types

Agentic AI has rapidly become one of the most talked-about drivers of business innovation, and memory is one of the key factors behind its potential. Just as memory underpins decision-making, learning, and adaptation in humans, it strengthens and shapes the intelligence of autonomous AI agents.

Agentic AI memory operates as a structured system with different layers, each serving a distinct purpose and shaping an agent's ability to provide meaningful interactions over time.

Keep reading as we break down what memory means in these systems, explore its different types, and explain why it matters so much to business leaders and decision-makers.

What is Memory in Agentic AI?

Agentic AI refers to autonomous systems that can set goals, plan activities, learn from their surroundings, and adapt over time. "Agentic" means that they act independently and can make complex choices, much like a person doing a job.

Memory is the mechanism that allows agents in agentic systems to recall experiences, track progress, and apply knowledge in new circumstances. Without memory, agents become purely reactive: they cannot learn or sustain contextual understanding over time.

Importance of Memory in Agentic Systems

Memory is useful for more than just keeping data; it also gives AI agents the tools they need to operate with continuity for long periods of time. This is what makes agent-based systems stable and scalable in the real world.

Memory for context retention

Traditional AI often treats each interaction as isolated. Agentic task memory, on the other hand, lets an agent remember past states, human inputs, and goals so it can maintain continuity across interactions and give personalised responses.

This ability can be seen in a virtual assistant that remembers problems from the past or learns what the user likes. Context-aware AI memory makes such continuity possible.

Experience-based learning

Like humans, agentic systems grow more effective through experience. With learning-based memory, an agent learns from previous interactions, discovers patterns, and uses them to improve future decisions.

In logistics, for example, an agent might detect that certain delivery routes lead to delays and plan future routing to avoid them. This goes beyond storing data: the memory is structured so that the agent itself can query it and act more intelligently.
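The routing example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `RouteMemory` class, the route names, and the delay figures are all hypothetical, and a production system would use far richer signals than an average delay.

```python
from collections import defaultdict


class RouteMemory:
    """Hypothetical store of observed delays per delivery route."""

    def __init__(self):
        self._delays = defaultdict(list)  # route name -> observed delays in minutes

    def record(self, route, delay_minutes):
        self._delays[route].append(delay_minutes)

    def average_delay(self, route):
        delays = self._delays.get(route)
        # Routes with no history are treated as having zero expected delay.
        return sum(delays) / len(delays) if delays else 0.0

    def best_route(self, candidates):
        # Pick the candidate with the lowest average observed delay.
        return min(candidates, key=self.average_delay)


memory = RouteMemory()
# Made-up observations, for illustration only.
for route, delay in [("highway", 30), ("highway", 50), ("ring_road", 10), ("ring_road", 20)]:
    memory.record(route, delay)

chosen = memory.best_route(["highway", "ring_road"])
print(chosen)  # ring_road
```

The point of the sketch is that the agent queries its own record of past outcomes before acting, rather than treating each routing decision as new.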

Did you know?

In 2025, Amazon reportedly introduced agentic AI into its U.S. logistics network, cutting delivery times by 15% by optimising routes and inventory with learning-based memory systems.

Enhancing long-term goal achievement

Sustained success depends on more than tactical wins; it requires strategic follow-through. Long-term memory lets AI agents stay committed to goals that extend well beyond the immediate session or input.

Complex activities such as strategic planning or product development require a long-term perspective, so a system needs agentic long-term memory to pursue these objectives effectively.

With it, the agent can set long-term objectives, break them into steps, and modify its strategy in light of new information. This capacity is essential in volatile environments that demand adaptability.
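As a rough illustration of a goal that outlives a single session, the sketch below saves a goal and its steps to a JSON file, reloads it later, and adjusts the plan. The file layout, step names, and helper functions are assumptions made for this example, not a standard format.

```python
import json
import os
import tempfile


def save_goal(path, goal):
    with open(path, "w", encoding="utf-8") as f:
        json.dump(goal, f)


def load_goal(path):
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def next_step(goal):
    """Return the first step not yet completed, or None when the goal is done."""
    for step in goal["steps"]:
        if not step["done"]:
            return step["name"]
    return None


# Session 1: define the goal, finish the first step, then persist the state.
path = os.path.join(tempfile.mkdtemp(), "goal.json")
goal = {
    "objective": "launch product",
    "steps": [
        {"name": "research market", "done": False},
        {"name": "build prototype", "done": False},
        {"name": "run pilot", "done": False},
    ],
}
goal["steps"][0]["done"] = True
save_goal(path, goal)

# Session 2 (later): reload, adapt the plan to new information, and continue.
resumed = load_goal(path)
resumed["steps"].insert(1, {"name": "review competitor launch", "done": False})
print(next_step(resumed))  # review competitor launch
```

A real agent would more likely keep this state in a database, but the principle is the same: progress and plans are stored outside the current session so they can be resumed and revised.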

4 Types of Memory in Agentic AI

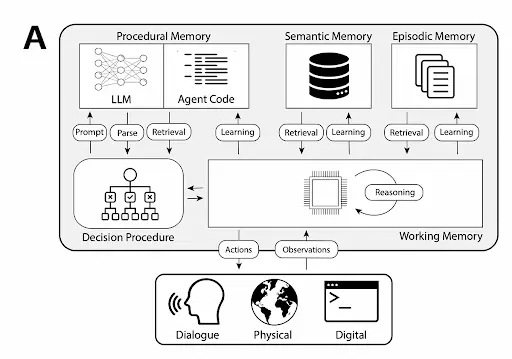

No single memory model works for every agentic AI system. An agent can interact intelligently with its surroundings, users, and goals because each type of memory serves a different purpose.

Let’s understand:

1. Short-term vs long-term memory

Short-term memory handles immediate tasks, letting an agent process current information and make fast decisions. Long-term memory holds accumulated knowledge and experience, and supports learning and adaptation over time.

An AI writing assistant, for example, could keep the paragraph being edited in short-term memory, while the user's preferred tone and style, learned from past sessions, live in long-term memory.
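One simple way to picture the split is a bounded buffer for recent input next to a durable store for preferences. The sketch below assumes a hypothetical `WritingAssistantMemory` class; the buffer size and preference keys are illustrative choices.

```python
from collections import deque


class WritingAssistantMemory:
    """Hypothetical two-tier memory: a bounded buffer plus a durable store."""

    def __init__(self, short_term_size=3):
        # Short-term: only the most recent sentences; older ones fall off automatically.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term: preferences that persist across paragraphs and sessions.
        self.long_term = {}

    def observe(self, sentence):
        self.short_term.append(sentence)

    def remember_preference(self, key, value):
        self.long_term[key] = value

    def build_context(self):
        prefs = ", ".join(f"{k}={v}" for k, v in sorted(self.long_term.items()))
        return f"[prefs: {prefs}] " + " ".join(self.short_term)


mem = WritingAssistantMemory(short_term_size=2)
mem.remember_preference("tone", "formal")
for s in ["First sentence.", "Second sentence.", "Third sentence."]:
    mem.observe(s)

print(mem.build_context())
# [prefs: tone=formal] Second sentence. Third sentence.
```

The first sentence has dropped out of the short-term buffer, while the tone preference is still available.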

2. Episodic memory

Episodic memory allows agents to remember specific experiences in context, enabling them to recognise patterns over time and adapt accordingly. It's the key to reflecting on what has happened and why.

Episodic memory in AI agents is built on records of specific events or experiences, each with its own context, action, and consequence.

Such memory thus allows agents to learn from past episodes, establish cause-and-effect relations, and apply learned lessons to other scenarios. For instance, in a business setting, this may translate into remembering the context under which a product launch failed so that the same mistakes are not repeated.
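The context, action, and consequence structure maps naturally onto a small record type. The following sketch is an assumption-laden illustration: the `Episode` fields, the tag-overlap similarity, and the sample episodes are hypothetical, and real systems typically use embeddings rather than tag matching.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    context: frozenset  # tags describing the situation
    action: str
    outcome: str        # "success" or "failure"


class EpisodicMemory:
    def __init__(self):
        self.episodes = []

    def record(self, context, action, outcome):
        self.episodes.append(Episode(frozenset(context), action, outcome))

    def recall(self, context, min_overlap=1):
        """Return past episodes sharing at least min_overlap tags, most similar first."""
        query = frozenset(context)
        scored = [(len(query & e.context), e) for e in self.episodes]
        matches = [(s, e) for s, e in scored if s >= min_overlap]
        matches.sort(key=lambda pair: pair[0], reverse=True)
        return [e for _, e in matches]

    def actions_to_avoid(self, context):
        # Actions that failed in similar past situations.
        return {e.action for e in self.recall(context) if e.outcome == "failure"}


memory = EpisodicMemory()
memory.record({"product_launch", "holiday_season"}, "launch without beta test", "failure")
memory.record({"product_launch", "q2"}, "launch after beta test", "success")
memory.record({"pricing_change"}, "raise price 10%", "failure")

print(memory.actions_to_avoid({"product_launch", "q4"}))
# {'launch without beta test'}
```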

3. Semantic memory

Agents need general knowledge to operate across domains. Semantic memory provides that base level of shared understanding, much as an enterprise knowledge base does.

Semantic memory stores general knowledge about the world: facts, concepts, and the relations between them.

Agents in operational systems use semantic memory to reason within their domains and reach well-grounded decisions.
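Facts, concepts, and relations are often modelled as subject-relation-object triples. The sketch below is a minimal version of that idea; the `SemanticMemory` class, the `is_a` relation name, and the sample facts are assumptions for illustration only.

```python
class SemanticMemory:
    """Hypothetical triple store: (subject, relation, object)."""

    def __init__(self):
        self.triples = set()

    def add(self, subject, relation, obj):
        self.triples.add((subject, relation, obj))

    def query(self, subject=None, relation=None, obj=None):
        # None acts as a wildcard for that position.
        return sorted(
            t for t in self.triples
            if (subject is None or t[0] == subject)
            and (relation is None or t[1] == relation)
            and (obj is None or t[2] == obj)
        )

    def is_a(self, subject, category):
        """Follow 'is_a' links transitively to test category membership."""
        seen, frontier = set(), [subject]
        while frontier:
            current = frontier.pop()
            if current == category:
                return True
            if current in seen:
                continue
            seen.add(current)
            frontier.extend(o for s, r, o in self.triples if s == current and r == "is_a")
        return False


kb = SemanticMemory()
kb.add("invoice", "is_a", "financial_document")
kb.add("financial_document", "is_a", "document")
kb.add("invoice", "requires", "approval")

print(kb.is_a("invoice", "document"))  # True
print(kb.query(subject="invoice"))
```

Here the agent was never told directly that an invoice is a document; it derives that from two stored facts, which is the kind of reasoning semantic memory is meant to support.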

4. Procedural memory

Mastering routine work gives agents repeatable success. This is where procedural memory comes in, allowing an agent to automate processes with precision and consistency.

Procedural memory helps agents remember how to perform tasks. The agent stores workflows and processes, much as a person remembers how to ride a bicycle without having to relearn it each time.

For instance, a reporting assistant can generate monthly analytics reports once it has memorised the steps of the procedure, making the task easy to repeat.
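A stored workflow can be as simple as a named, ordered list of steps that the agent replays on demand. The sketch below assumes a hypothetical `ProceduralMemory` class and three toy step functions; a real reporting pipeline would of course do far more.

```python
class ProceduralMemory:
    """Hypothetical registry of named, reusable workflows."""

    def __init__(self):
        self._procedures = {}

    def learn(self, name, steps):
        # Store the ordered list of callables that make up the procedure.
        self._procedures[name] = list(steps)

    def run(self, name, data):
        # Replay the stored steps in order, feeding each result to the next step.
        result = data
        for step in self._procedures[name]:
            result = step(result)
        return result


def drop_missing(rows):
    return [r for r in rows if r is not None]


def total(rows):
    return sum(rows)


def format_report(value):
    return f"Monthly total: {value}"


skills = ProceduralMemory()
skills.learn("monthly_report", [drop_missing, total, format_report])

print(skills.run("monthly_report", [120, None, 80]))  # Monthly total: 200
print(skills.run("monthly_report", [50, 25]))         # Monthly total: 75
```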

How Agentic AI Uses Memory for Planning and Decision-Making

Good decision-making is not merely about data; it requires learning from the past and envisaging the future. Memory empowers the AI agent to connect intention to action using adaptive planning.

Memory-driven task sequencing

Multi-step tasks require sequencing and adaptation. An agent uses memory to monitor what has been done, foresee what must be done next, and adjust accordingly.

In financial forecasting, for example, an agent could adjust its plan based on historical indicators kept in memory. Agentic AI memory helps ensure that each decision is made with awareness of the larger process.
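Tracking what has been done and working out what can happen next can be sketched with a small dependency map. The `TaskTracker` class and the forecasting step names below are hypothetical, chosen only to illustrate the idea.

```python
class TaskTracker:
    """Hypothetical tracker that remembers completed steps of a multi-step task."""

    def __init__(self, dependencies):
        # dependencies maps each step to the steps that must finish first.
        self.dependencies = dependencies
        self.completed = []

    def ready_steps(self):
        done = set(self.completed)
        return [
            step for step, deps in self.dependencies.items()
            if step not in done and all(d in done for d in deps)
        ]

    def complete(self, step):
        if step not in self.ready_steps():
            raise ValueError(f"{step!r} is not ready yet")
        self.completed.append(step)


plan = TaskTracker({
    "collect_data": [],
    "clean_data": ["collect_data"],
    "fit_model": ["clean_data"],
    "write_forecast": ["fit_model"],
})
plan.complete("collect_data")
print(plan.ready_steps())  # ['clean_data']
```

Because the completed list is remembered, the agent can be interrupted and still know where it stands in the sequence, and it refuses to run a step whose prerequisites are missing.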

Learning from past interactions

The smartest systems evolve by learning from history to offer more relevant and refined support over time.

Every user interaction offers valuable insights. With memory, agents can identify common issues, recognise individual preferences, and evolve over time.

For instance, a scheduling assistant that recalls a CEO’s preference for afternoon meetings can proactively suggest suitable time slots. This ability stems from effective knowledge retention in AI.

Reinforcement through feedback loops

True intelligence is built through reflection. Feedback, when stored and interpreted effectively, allows agents to self-correct and improve.

Feedback plays a critical role in refining behaviour. Memory allows agents to store outcomes, assess effectiveness, and adapt strategies accordingly.

This learning loop is already redefining how brands plan and deploy campaigns.

Reinforcement learning is built around exactly this kind of feedback loop, in which systems improve through repeated trial and error. Memory-enhanced LLM agents can apply the same idea to produce better results over time.
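A very small version of such a loop is an epsilon-greedy strategy picker that keeps a running average reward per strategy. This is a sketch of one basic reinforcement learning technique, not a description of how any particular agent framework works; the strategy names and reward values are invented for the example.

```python
import random


class FeedbackMemory:
    """Hypothetical epsilon-greedy picker with remembered outcomes."""

    def __init__(self, strategies, epsilon=0.1, seed=0):
        self.values = {s: 0.0 for s in strategies}  # running mean reward per strategy
        self.counts = {s: 0 for s in strategies}
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def choose(self):
        # Explore occasionally; otherwise exploit the best-known strategy.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))
        return max(self.values, key=self.values.get)

    def feedback(self, strategy, reward):
        self.counts[strategy] += 1
        n = self.counts[strategy]
        # Incremental mean: new = old + (reward - old) / n
        self.values[strategy] += (reward - self.values[strategy]) / n


# epsilon=0.0 disables exploration so the example is deterministic.
agent = FeedbackMemory(["short_post", "video", "carousel"], epsilon=0.0)
for strategy, reward in [("short_post", 0.2), ("video", 0.9), ("video", 0.7), ("carousel", 0.4)]:
    agent.feedback(strategy, reward)

print(agent.choose())  # video
```

Stored outcomes shift future choices toward what has worked, while a non-zero `epsilon` would let the agent keep testing alternatives.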

Technical Approaches to Agentic Memory Implementation

Behind every memory-aware agent is a carefully engineered architecture. Understanding the technology powering memory is essential to scaling and deploying intelligent systems.

Memory modules in LLM architectures

Adding a memory module turns a language model into an interactive agent. It allows conversations and context to be preserved beyond a single prompt.

Large Language Models (LLMs) like GPT-4 do not retain information between calls on their own. To make them agentic, memory modules are integrated into the architecture.

These modules store previous interactions, retrieve relevant facts, and support coherent dialogue across extended sessions. This is what upgrades a basic LLM to a context-rich system capable of advanced, sustained engagement.
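A minimal conversation memory can be sketched as a wrapper that stores past turns and prepends them to each new prompt. In the sketch below, `fake_llm` is a stand-in for a real model call, and `ConversationMemory` and `chat` are hypothetical names, not part of any specific library.

```python
class ConversationMemory:
    """Hypothetical store of recent dialogue turns."""

    def __init__(self, max_turns=20):
        self.turns = []  # list of (role, text)
        self.max_turns = max_turns

    def add(self, role, text):
        self.turns.append((role, text))
        self.turns = self.turns[-self.max_turns:]  # keep only the most recent turns

    def as_prompt(self, new_message):
        history = "\n".join(f"{role}: {text}" for role, text in self.turns)
        return f"{history}\nuser: {new_message}\nassistant:".lstrip()


def fake_llm(prompt):
    """Stand-in for a real model call: reports how many earlier user turns it can see."""
    prior_user_turns = prompt.count("user:") - 1
    return f"I can see {prior_user_turns} earlier user message(s)."


def chat(memory, message, llm=fake_llm):
    reply = llm(memory.as_prompt(message))
    memory.add("user", message)
    memory.add("assistant", reply)
    return reply


memory = ConversationMemory()
print(chat(memory, "My order number is 1234."))   # I can see 0 earlier user message(s).
print(chat(memory, "What was my order number?"))  # I can see 1 earlier user message(s).
```

The model itself stays stateless; continuity comes entirely from the history the memory module places in front of each new message.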

Retrieval-Augmented Generation (RAG)

RAG bridges the gap between generative intelligence and factual accuracy. It enables agents to reference external knowledge while maintaining conversational fluency.

Retrieval-augmented generation enhances generative models by connecting them to an external knowledge source. Rather than relying entirely on its internal parameters, the system retrieves relevant information from a memory store at query time.

This gives the agent access to fresh, domain-specific data and reduces inaccuracies. For example, a compliance assistant could look up the latest regulations in order to give correct advice.
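The retrieve-then-generate flow can be shown without any external services. The sketch below ranks documents by word overlap, which is a crude stand-in for the embedding search a real RAG system would use; the sample regulation texts and function names are made up for illustration.

```python
import re


def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Rank documents by shared words with the query (a stand-in for embedding search)."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    # Drop documents that share no words with the query at all.
    return [d for d in ranked[:k] if q & tokenize(d)]


def build_prompt(query, documents, k=2):
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


# Hypothetical sample data, not real regulations.
regulations = [
    "Data retention: customer records must be deleted after 24 months.",
    "Expense policy: travel bookings need manager approval.",
    "Data breach: incidents must be reported within 72 hours.",
]

prompt = build_prompt("How long can we keep customer records?", regulations, k=1)
print(prompt)
```

The resulting prompt would then be passed to the language model, which answers from the retrieved passage instead of relying only on what it learned during training.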

Did you know?

A 2024 study published on arXiv found that RAG-based chatbots achieved a 45% improvement in factual accuracy and a 27% reduction in hallucinations compared to standard LLMs, illustrating the value of integrating memory modules.

Memory graphs and vector stores

Relationships matter just as much as facts. Memory graphs and vector stores organise knowledge for flexible reasoning and contextual retrieval.

A memory graph maps out relationships between entities, allowing agents to reason about these connections. Vector stores such as FAISS or Pinecone, on the other hand, index memories as embeddings so they can be found efficiently through similarity search.

Together, these tools make memory retrieval fast, scalable, and accurate, a massive advantage in enterprise settings where time and accuracy are of the essence.
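To show how the two can work together, the sketch below uses a tiny in-memory vector search to find the closest entity and then follows graph edges to related entities. It deliberately avoids FAISS and Pinecone and uses plain Python instead; the three-dimensional "embeddings", entity names, and relations are hand-made assumptions.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Hypothetical brute-force vector store for illustration only."""

    def __init__(self):
        self.items = []  # list of (key, vector)

    def add(self, key, vector):
        self.items.append((key, vector))

    def search(self, query, k=1):
        ranked = sorted(self.items, key=lambda kv: cosine(query, kv[1]), reverse=True)
        return [key for key, _ in ranked[:k]]


# Hand-made vectors; real systems would use a trained embedding model.
store = TinyVectorStore()
store.add("acme_corp", [0.9, 0.1, 0.0])
store.add("invoice_42", [0.1, 0.9, 0.1])
store.add("support_ticket_7", [0.0, 0.2, 0.9])

# Memory graph: entity -> list of (relation, related entity).
graph = {
    "acme_corp": [("issued", "invoice_42"), ("opened", "support_ticket_7")],
    "invoice_42": [("billed_to", "acme_corp")],
}

entry = store.search([0.8, 0.2, 0.1], k=1)[0]
related = [target for _, target in graph.get(entry, [])]
print(entry, related)  # acme_corp ['invoice_42', 'support_ticket_7']
```

The vector store answers "what is this query most like?", and the graph answers "what is connected to it?". Dedicated libraries replace the brute-force search here with indexes that scale to millions of vectors.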

Let GrowthJockey Power your Agentic AI with Memory that Matters

Agentic AI memory forms the backbone of intelligent autonomy: it allows AI agents to retain context, learn from past experiences, and build long-term strategies. When memory is embedded into AI, the system evolves from a task-based tool into a strategic asset that grows with your business. GrowthJockey applies this principle by embedding AI and ML solutions into its digital services, spanning data analytics, demand generation, and smart operations, to help C-suite leaders drive smarter, more adaptive, and sustainable growth.

FAQs on Agentic AI Memory

1. What is agentic AI memory and why is it important?

Agentic AI memory refers to the structured, long-term data store that lets autonomous agents remember goals, past actions, and outcomes. By retaining context, these agents avoid repeating errors and deliver consistent, goal-based performance. Strong memory in agentic systems turns reactive chatbots into intelligent partners that learn, adapt, and plan over extended interactions.

2. How does episodic memory in AI agents differ from short-term storage?

Episodic memory in AI agents stores rich snapshots of specific events, such as who did what, when, and with what result, much like a human recalling a meeting. Short-term buffers hold immediate inputs only briefly.

Episodic memory supports learning-based decision-making, enabling agents to analyse past situations, recognise patterns, and refine future plans with greater accuracy.

3. What technologies enable dynamic memory architecture for AI planning?

Dynamic memory architecture combines vector databases, retrieval-augmented generation, and hierarchical memory graphs. These components let an agent tag, index, and rapidly fetch relevant data for intelligent action sequencing. When paired with policy networks, the setup yields agentic long-term memory that scales across tasks without sacrificing speed or recall fidelity.

4. How do memory-enhanced LLM agents improve task automation?

Memory-enhanced LLM agents log each step of a workflow, such as prompts, tool calls, and outcomes, into an external store. On the next run, they pull that history to avoid redundant steps and adapt to new constraints. This agentic task memory supports real-time context awareness, raising completion rates and reducing human oversight in complex, multi-step automations.