HITL vs. Agentic AI: Who’s Really Driving the System?

As AI systems get smarter, many are starting to operate on their own, making decisions and learning along the way. The rise of agentic AI is exciting, but it also brings new problems, such as flawed reasoning and unpredictable behaviour.

That's why the human-in-the-loop (HITL) approach is so important. Instead of leaving AI to work on its own, HITL involves humans at key points: reviewing outputs, guiding steps, or stepping in when needed. Imagine the difference between an AI that sends texts to customers on its own and one that drafts them and waits for a human to approve them.
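The draft-then-approve pattern from the texting example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `Draft`, `draft_message`, and `send_with_approval` are hypothetical names, the "model" is a fixed template, and the reviewer and sender are plain callbacks rather than a real review UI or messaging service.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Draft:
    customer: str
    text: str


def draft_message(customer: str) -> Draft:
    # Stand-in for a model call (assumption): a real system would generate this text.
    return Draft(customer=customer, text=f"Hi {customer}, your order has shipped.")


def send_with_approval(
    customers: List[str],
    approve: Callable[[Draft], bool],
    send: Callable[[Draft], None],
) -> List[Draft]:
    """Draft one message per customer, but send only what a human approves.

    Returns the drafts that the reviewer held back.
    """
    held_back: List[Draft] = []
    for customer in customers:
        draft = draft_message(customer)
        if approve(draft):      # the human checkpoint
            send(draft)
        else:
            held_back.append(draft)
    return held_back


if __name__ == "__main__":
    outbox: List[Draft] = []
    # Hypothetical reviewer who rejects one draft.
    held = send_with_approval(
        ["Asha", "Ben"],
        approve=lambda d: d.customer != "Ben",
        send=outbox.append,
    )
    print("sent:", [d.customer for d in outbox])
    print("held back:", [d.customer for d in held])
```

A fully agentic version would simply call `send` on every draft; the only structural difference is the `approve` checkpoint.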

This blog compares human-in-the-loop (HITL) and agentic AI in real-life situations to help you understand both approaches.

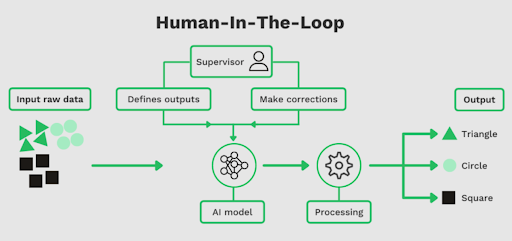

What is Human-in-the-Loop (HITL) in AI?

Human-in-the-loop (HITL) is an approach in which people help train and direct AI systems. Instead of allowing machines to learn entirely on their own, humans step in at key moments to review, correct, and steer the system.

This is essentially AI-human collaboration, much like a teacher helping a student grasp new ideas through repeated guidance.

Here’s how HITL works in real life:

- Supervised machine learning: Humans provide labelled data, such as identifying objects in images or marking correct answers, so the AI model can learn faster and more accurately.
- Ongoing feedback loop: Each time a human steps in to review or correct an AI’s decision, the system improves its understanding.
- Unsupervised learning support: In unsupervised tasks, where the data has no labels, HITL guides the AI by giving it context and clarity.
- Balancing strengths: HITL combines human judgment with machine efficiency to create more dependable outcomes.
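The feedback loop described above can be illustrated with a small Python sketch. It is a simplified illustration, not a training pipeline: `review_predictions`, the toy `predict` rule, and the `truth` table are all hypothetical, and the "human" is simulated by a lookup. The point is only that every review yields a labelled example, and corrections are the ones that teach the model something new.

```python
from typing import Callable, List, Optional, Tuple


def review_predictions(
    items: List[str],
    predict: Callable[[str], str],
    human_label: Callable[[str, str], Optional[str]],
) -> Tuple[List[Tuple[str, str]], int]:
    """Collect labelled examples from a human review pass.

    `human_label(item, guess)` returns a corrected label, or None to accept
    the model's guess. Returns (labelled examples, number of corrections).
    """
    labelled: List[Tuple[str, str]] = []
    corrections = 0
    for item in items:
        guess = predict(item)
        fix = human_label(item, guess)
        if fix is not None and fix != guess:
            corrections += 1
            labelled.append((item, fix))    # human correction becomes the label
        else:
            labelled.append((item, guess))  # human confirmed the model's guess
    return labelled, corrections


if __name__ == "__main__":
    # Toy model (assumption): a keyword rule standing in for a trained classifier.
    def predict(text: str) -> str:
        return "cat" if "whiskers" in text else "dog"

    # Simulated reviewer (assumption): looks up the right answer.
    truth = {"whiskers and tail": "cat", "barks loudly": "dog", "purrs softly": "cat"}

    def human_label(text: str, guess: str) -> Optional[str]:
        return None if truth[text] == guess else truth[text]

    examples, fixed = review_predictions(list(truth), predict, human_label)
    print(examples, fixed)
```

In a real system the returned examples would be added to the training set for the next model update.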

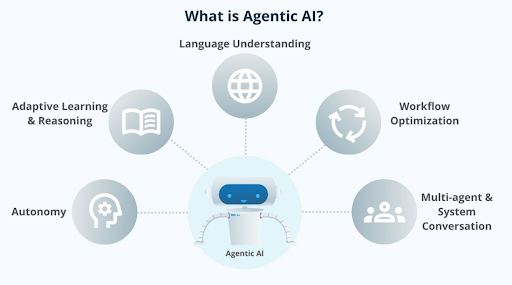

What is Agentic AI?

Agentic AI is a type of artificial intelligence designed to operate independently. While traditional AI follows set instructions, agentic AI makes decisions, learns from new data, and adapts its actions in real time to reach its goals. What makes it unique is that it can think and act on its own.

Agentic AI can handle multi-step complex tasks with the help of machine learning and large language models. For instance, it might oversee a supply chain, spotting issues and solving them without waiting for human input.

Here are the essential benefits of agentic AI:

- Autonomous decision-making and real-time self-optimisation
- Handling complex workflows involving many steps
- Continuous learning and adapting to new data
- Ability to work in fast-changing, unpredictable environments

Learn how different types of AI agents operate based on their intelligence level and interaction models.

Key Differences Between HITL and Agentic AI

Understanding the difference between human-in-the-loop (HITL) and agentic AI matters when deciding how much autonomy to give an AI system. This comparison shows how each approach handles control, trust, and responsibility in very different ways.

| Aspect | Human-in-the-Loop (HITL) | Agentic AI |
|---|---|---|
| Control | Humans stay actively involved, reviewing and guiding AI decisions. | AI operates mostly independently, making decisions on its own. |
| Reliability | Relies on human expertise to handle tricky or unclear situations. | Aims for full autonomy but can struggle with unpredictable cases. |
| Ethical oversight | Humans ensure fairness, accountability, and legal compliance. | Faces challenges with transparency and responsibility. |
| Risk management | Real-time human intervention helps prevent mistakes or harm. | Decisions can be opaque, increasing the risk of unintended outcomes. |
| Trust and adoption | Builds greater user trust through transparency and control. | Can cause hesitation due to lack of human oversight. |
| Use cases | Ideal where safety and ethics are critical, like healthcare or finance. | Suited for tasks requiring rapid, complex autonomous action. |

Advantages of HITL

To stay ahead of the competition, businesses want the speed of automation without losing the human touch. Human-in-the-loop systems do just that. They combine the power of AI with human judgment to make choices that are smarter and safer.

Here are the top benefits of incorporating the HITL approach into your business for smarter decision-making:

- Better accuracy and quality: HITL lets humans step in when AI hits a grey area, such as confusing or ambiguous data formats. Mistakes become less likely and results improve.
- Continuous learning: In supervised machine learning, human feedback helps AI models improve over time, so systems stay sharp and can quickly learn new things.
- Real-time governance: With HITL, businesses can actively watch and guide AI outputs, catching poor decisions before they take effect. It also acts as a safety net for high-stakes or sensitive decisions.
- Supports complex problem-solving: Where creativity or context is needed, human involvement adds depth and flexibility, something AI alone can’t always provide.

Advantages of Agentic AI

Businesses worldwide are increasingly adopting autonomous AI agents to make their operations run more smoothly.

In fact, 29% of businesses are already using agentic AI, and another 44% plan to start using it within the next year. The main reasons are to save money, improve customer service, and reduce the need for human input.

Here is how:

- Takes over complex tasks: Unlike traditional automation, agentic AI can handle complex workflows such as supply chains and customer service without needing step-by-step input.
- Adapts and learns on the go: These systems learn from feedback and make smarter decisions over time, which means fewer errors and more efficiency.
- Scales with your business: As workloads grow, automation systems powered by agentic AI adjust without extra upgrades or staff, which makes growth easier.
- Frees up human potential: Agentic AI takes care of boring, repetitive jobs so people can focus on creative work and problem-solving that needs a human touch.

When to Use HITL vs Agentic AI

Choosing between human-in-the-loop (HITL) and agentic AI depends largely on the nature of the task, the level of risk involved, and the need for oversight.

HITL works great when human supervision is critical

This includes tasks with unclear instructions, ethical concerns, or high-stakes consequences.

In healthcare, AI can help with diagnosis, but a human should always make the final call. In the same way, real-time human intervention in military applications makes sure that choices are made in a way that is responsible and ethical.

In these cases, a strong AI-human collaboration is important, with humans making decisions and machines handling the data.

You should choose HITL when:

- The outcome directly impacts human lives or legal rights
- There’s a need for transparency or traceability
- The environment is unpredictable or sensitive

Autonomous AI agents work best where tasks are repetitive and rules are well-defined

In logistics, for instance, AI agents can route shipments in real time. In finance, they can detect fraud across millions of transactions much faster than a human could. In these cases, autonomy boosts output and lowers the need for constant control.

You can use agentic AI when:

- Tasks require fast, consistent execution
- Human involvement adds unnecessary delay
- The system can learn and adapt without direct input
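The two checklists above can be read as a simple decision rule. The sketch below encodes them in Python as a rough illustration: `TaskProfile` and `choose_mode` are hypothetical names, the boolean fields paraphrase the criteria in this section, and defaulting to HITL when no criterion applies is an assumption of this sketch rather than a rule from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskProfile:
    affects_lives_or_legal_rights: bool
    needs_traceability: bool
    unpredictable_or_sensitive: bool
    needs_fast_consistent_execution: bool


def choose_mode(task: TaskProfile) -> str:
    """Return "hitl" or "agentic" for a task, following the checklists above."""
    # Any high-stakes criterion calls for human oversight.
    if (
        task.affects_lives_or_legal_rights
        or task.needs_traceability
        or task.unpredictable_or_sensitive
    ):
        return "hitl"
    # Low-risk tasks that need speed and consistency suit autonomy.
    if task.needs_fast_consistent_execution:
        return "agentic"
    # Assumption: when nothing clearly applies, keep a human involved.
    return "hitl"


if __name__ == "__main__":
    diagnosis = TaskProfile(True, True, True, False)
    shipment_routing = TaskProfile(False, False, False, True)
    print(choose_mode(diagnosis))         # hitl
    print(choose_mode(shipment_routing))  # agentic
```

Real decisions are rarely this binary, so a rule like this is best treated as a starting checklist for discussion.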

Real-World Comparison: Can HITL and Agentic AI Work Together?

HITL and agentic AI often work together in a balanced system that draws on the strengths of both.

AI often takes care of task-level autonomy, which means it makes choices and completes tasks on its own within a defined scope. Meanwhile, humans oversee the process, stepping in when complex judgment or ethical considerations are required. When scaled, these hybrid models can evolve into systems that use a multi-agent architecture for distributed task execution.

Some examples of such collaboration:

- Autonomous Vehicles: AI handles driving tasks like staying in the right lane and controlling speed, but human drivers step in when something unexpected happens.
- Healthcare: AI helps with diagnosis and routine data analysis, while doctors review treatment choices and make sure they are correct.
- Defence and Security: AI watches data feeds and flags threats on its own, but humans make the final decisions in sensitive operations.

If you're exploring ways to build AI agents that support hybrid autonomy and human governance, here’s a useful starting point.

Future of Intelligent Systems: HITL vs. Agentic AI

The future of intelligent systems depends on finding the right balance between human-in-the-loop (HITL) and agentic AI. As AI gets smarter and more independent, agentic AI can decide what to do and then do it on its own. However, human oversight is still needed to keep systems safe, ethical, and accountable.

Why HITL still matters:

- Safety and Ethics: HITL is vital in sensitive fields like healthcare and autonomous driving, where mistakes can be costly.
- Accountability: Humans ensure that AI decisions align with social and legal standards.

Rise of agentic AI

- Autonomy: Agentic AI systems can perform tasks without constant human input.
- Efficiency: They excel in handling large-scale, routine operations quickly and reliably.

Hybrid models on the rise

- Combining HITL oversight with agentic AI’s autonomy creates systems that are both smart and trustworthy.
- Humans intervene only when AI confidence is low or ethical concerns arise.
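The hybrid rule of escalating only on low confidence or ethical concerns can be sketched as a small routing function. This is a minimal Python illustration under stated assumptions: `Decision`, `resolve`, and the `ask_human` callback are hypothetical names, the 0.8 threshold is an arbitrary example value, and how a system produces a trustworthy confidence score or ethical flag is outside the scope of the sketch.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Decision:
    action: str
    confidence: float           # 0.0 to 1.0, as reported by the AI (assumption)
    ethical_flag: bool = False  # set when a policy check raises a concern


def resolve(
    decision: Decision,
    ask_human: Callable[[Decision], str],
    threshold: float = 0.8,     # example value, not a recommendation
) -> Tuple[str, str]:
    """Return (final action, who decided)."""
    if decision.ethical_flag or decision.confidence < threshold:
        # Escalate: the human's answer replaces the AI's proposed action.
        return ask_human(decision), "human"
    # Confident and unflagged: the AI acts on its own.
    return decision.action, "ai"


if __name__ == "__main__":
    reviewer = lambda d: f"reviewed: {d.action}"  # simulated human reviewer
    print(resolve(Decision("approve refund", 0.95), reviewer))
    print(resolve(Decision("approve refund", 0.40), reviewer))
    print(resolve(Decision("deny claim", 0.99, ethical_flag=True), reviewer))
```

Raising the threshold sends more decisions to people and moves the system toward HITL; lowering it moves the system toward full autonomy.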

Conclusion on Human-in-the-Loop (HITL) vs. Agentic AI

Human-in-the-loop (HITL) and agentic AI are two distinct approaches that complement each other. Agentic AI brings task autonomy and efficient, scalable automation, while HITL keeps safety, ethics, and real-time AI governance under human control.

Teams building modern intelligent systems need to know when to use human-in-the-loop systems and when to use fully autonomous AI agents in order to balance control, trust, and performance.

At GrowthJockey, we integrate customised AI solutions that blend the best of both worlds to assist businesses in navigating this changing landscape.

Partner with GrowthJockey today to harness the power of intelligent systems and drive your business forward with confidence.

HITL vs Agentic AI FAQs

1. What is the difference between an AI agent and agentic AI?

AI agents perform specialised tasks individually, while agentic AI describes systems that act with greater freedom to pursue complex objectives.

2. What is agentic AI according to IBM?

In IBM's usage, agentic AI refers to intelligent systems designed to work autonomously for business purposes, making decisions and learning continually with only limited assistance from humans.

3. What is human-in-the-loop for AI agents?

Human-in-the-loop means that humans actively guide, review, or correct decisions made by AI agents, ensuring the accuracy, ethical standards, and safety of AI-driven processes.

4. What is the main purpose of applying a human-in-the-loop approach when using AI?

The main goal is to combine human judgment with AI's speed. This provides a mechanism for control, reduces errors, and handles moral or complicated issues that are unsuitable for AI working on its own.