How Agentic AI with LangChain is Transforming Industries

AI is no longer a futuristic concept. It’s now part of boardroom discussions, product strategies, and investor expectations.

But while the idea of AI is easy to buy into, operationalising it is something else entirely.

For most businesses, the real challenge isn’t deciding whether to adopt AI. It’s figuring out how to integrate it into messy, multi-system environments without a dedicated machine learning team or a custom-built stack.

And this is where the adoption stalls.

LangChain is an open-source framework designed to bridge the gap between ambition and execution by making agentic AI practical to build.

It allows businesses to take powerful Large Language Models (LLMs) and turn them into operational agents - tools that connect to live data, call APIs, make decisions, and take action.

In short, LangChain brings structure, reasoning, and autonomy to AI applications without requiring deep ML expertise or custom infrastructure.

In the rest of this blog, we’ll look at what LangChain does, walk through a basic setup, and explore how LangChain-powered agents are being deployed across industries. Let’s dive in.

What is LangChain? Understand with a simple example

Before jumping into setup or code, it’s worth stepping back to ask a simple question:

What does LangChain actually do, and why does it matter?

At a glance, it’s easy to assume LangChain is just another AI tool. But that’s not quite right.

To understand its value, start with what most people already know: how a language model like GPT-3.5 typically works.

You ask a question, and the model responds. That’s the standard interaction - text in, text out.

There is no memory, no awareness of previous steps, no ability to fetch live data, call an API, or complete a task in your system.

It’s powerful but isolated. This is exactly where LangChain fits in.

LangChain takes that same model and gives it structure, memory, logic, and access to external tools.

So instead of answering one question at a time, your application can now:

- Hold a multi-turn conversation
- Reference internal documents or external sources
- Trigger tools, run calculations, or query a database
- Handle decision-making across a sequence of steps

LangChain turns a passive language model into an active, context-aware agent.

LangChain tutorial: Get started without being an AI expert

Getting started with LangChain is easier than you might think, and you don’t need to be an AI expert. Whether you're building your first AI project or simply exploring what LangChain can do, the framework makes it straightforward to get up and running.

Follow these easy steps to set up LangChain in no time (no matter what industry you are in) and start creating powerful applications.

1. Set up your Python environment the easy way

Before installing LangChain, you’ll want to create a separate Python environment.

This helps avoid conflicts between different projects or package versions. Think of it as setting up a clean workspace, so tools for one project don’t interfere with another.

We’ll use Conda, a popular environment manager that makes this process simple and reliable.

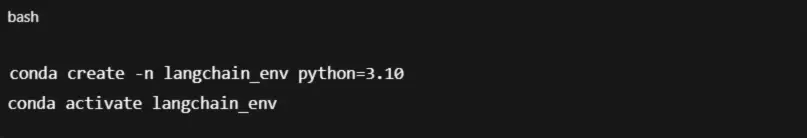

Do this:

This creates a new environment named langchain_env and activates it.

Know this:

A Python environment is like a sandbox. It keeps all the libraries and packages isolated to one project, so updates or installs don’t affect anything else on your system.

Did you know?

Conda is a tool that helps you manage environments and install packages easily. It’s widely used in data science, machine learning, and AI workflows because of its flexibility and speed.
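A quick way to confirm the environment is actually active is a short Python check. This is a minimal sketch that relies on Conda's `CONDA_DEFAULT_ENV` variable, which `conda activate` sets to the name of the active environment; `langchain_env` matches the name used above.

```python
import os
import sys
from typing import Optional


def active_conda_env() -> Optional[str]:
    """Return the name of the active Conda environment, or None if none is active."""
    # `conda activate <name>` sets CONDA_DEFAULT_ENV in the current shell.
    return os.environ.get("CONDA_DEFAULT_ENV")


def check_environment(expected: str = "langchain_env") -> bool:
    """Print the interpreter in use and report whether the expected env is active."""
    env = active_conda_env()
    print(f"Python executable: {sys.executable}")
    print(f"Active Conda environment: {env or '(none)'}")
    return env == expected


if __name__ == "__main__":
    if not check_environment():
        print("Run `conda activate langchain_env`, then try again.")
```

If the executable path printed does not sit inside your Conda installation's `envs/langchain_env` folder, packages you install next may end up in the wrong place.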

2. Install the tools that make LangChain work

With your environment ready, it’s time to bring in the core tools you’ll need to start working with LangChain.

LangChain acts as a connector between LLMs and other components like APIs, files, or databases. So you’ll need a few key libraries to enable that functionality.

Here’s what to install and why.

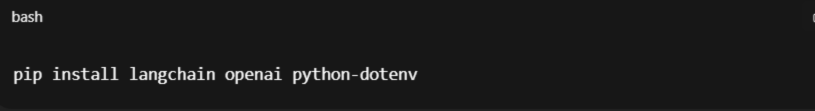

Run this in your terminal:

Let’s break down what each of these does:

- langchain

The main framework. This gives you access to LangChain's modules like chains, agents, tools, memory, and retrieval.

- openai

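Required if you're using GPT-3.5, GPT-4, or any other OpenAI model. LangChain doesn’t come with models; it connects to them. In recent LangChain releases, the OpenAI integration itself lives in the separate langchain-openai package, which pulls in openai as a dependency.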

- python-dotenv

Helps you securely store and load environment variables (like your API key) from a .env file. This keeps sensitive data out of your main codebase.

If you’re planning to build apps that need to store, search, or retrieve text, like:

- Document Q&A
- AI chat with memory
- Custom knowledge assistants

Install ChromaDB, a lightweight, open-source vector database that works well with LangChain.
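After installing, you can confirm which of these packages Python actually sees. This sketch uses only the standard library's `importlib.metadata`; the package list mirrors the ones named in this step, and `chromadb` will simply show as missing if you skipped it.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional

# pip distribution names from this step; chromadb is only needed for retrieval apps.
PACKAGES = ("langchain", "openai", "python-dotenv", "chromadb")


def installed_versions(packages: Iterable[str] = PACKAGES) -> Dict[str, Optional[str]]:
    """Map each package name to its installed version, or None if it is missing."""
    found: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name:15} {ver or 'NOT INSTALLED'}")
```

Run it from inside `langchain_env`; a package reported as missing there usually means it was installed into a different environment.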

Know this:

LangChain doesn’t generate answers on its own. It utilises LLMs (such as GPT) to process prompts, but can also integrate with tools, files, APIs, and data sources to generate more accurate, real-world responses.

Did you know?

LangChain supports many backends for vector storage, like Pinecone, Weaviate, Chroma, FAISS, and others. For beginners, ChromaDB is often the easiest way to get started locally.

3. Add your API key safely (no leaks allowed)

LangChain connects to language models through an API. For most beginners, that model is GPT-3.5 / GPT-4 served by OpenAI.

You need to give your application the key without hard-coding it in public code. The safest approach is a hidden .env file.

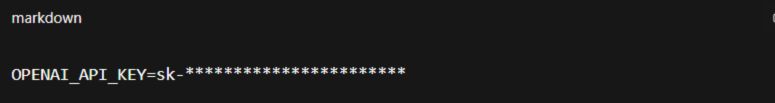

- Create the file inside your project folder:

- Paste your key (copied from the OpenAI dashboard) into the file:

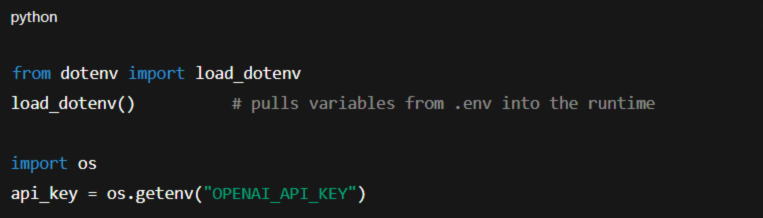

- Load the key in Python so LangChain can see it:

You should never print the key or push .env to GitHub. Make sure you add .env to .gitignore so it stays local.

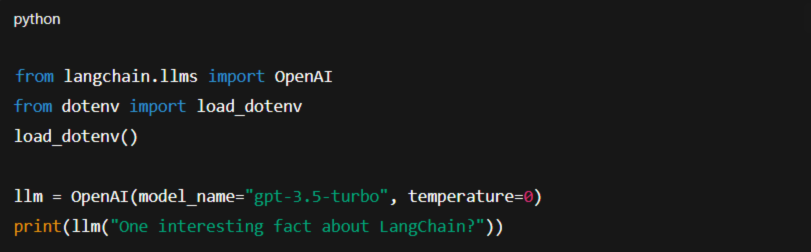
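Here is one way the loading step and the .gitignore reminder might look in code. It is a sketch, not the only approach: `load_api_key` and `env_file_is_ignored` are hypothetical helper names, python-dotenv's `load_dotenv()` does the actual loading, and the .gitignore check only looks for a literal `.env` or `/.env` line.

```python
import os
from pathlib import Path

try:
    # python-dotenv from step 2; treated as optional so the script still works
    # when the key is already exported in your shell.
    from dotenv import load_dotenv
except ImportError:
    load_dotenv = None


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Load variables from .env (if python-dotenv is available) and return the key."""
    if load_dotenv is not None:
        load_dotenv()  # by default, does not override variables already set
    key = os.getenv(var_name)
    if not key:
        raise RuntimeError(f"{var_name} is not set; check your .env file.")
    return key  # hand the key to your code; never print or log it


def env_file_is_ignored(project_dir: str = ".") -> bool:
    """Rough check that the project's .gitignore lists the .env file."""
    gitignore = Path(project_dir) / ".gitignore"
    if not gitignore.exists():
        return False
    entries = {line.strip() for line in gitignore.read_text().splitlines()}
    return bool(entries & {".env", "/.env"})


if __name__ == "__main__":
    load_api_key()
    print("API key loaded.")
    if not env_file_is_ignored():
        print("Warning: add .env to your .gitignore before committing.")
```

For an authoritative answer that accounts for every ignore rule, `git check-ignore .env` prints the path when Git is ignoring it.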

Here’s a quick sanity test for you:

- Create a file quick_test.py:

- Run:

A response in your terminal confirms the LangChain framework is talking to OpenAI successfully.

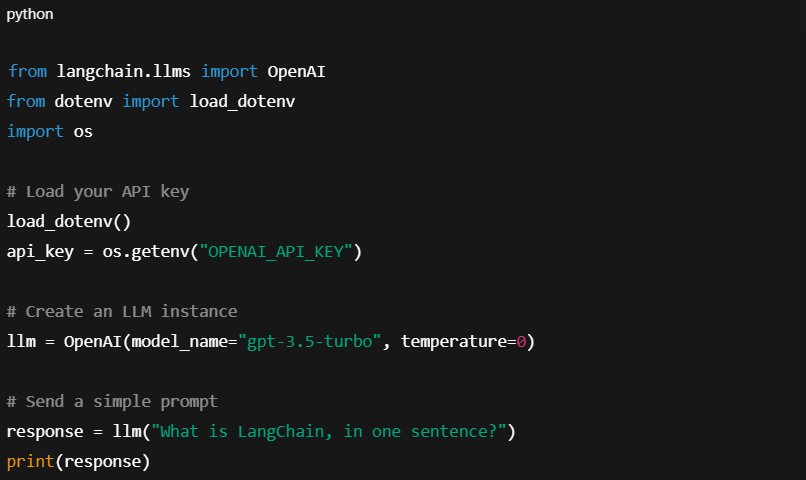
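A minimal sketch of what quick_test.py might contain. It assumes the langchain-openai integration package (`pip install langchain-openai`), which recent LangChain releases use for OpenAI models, and the `gpt-3.5-turbo` model name; neither is named in the steps above, so adjust both to your setup.

```python
"""quick_test.py: confirm LangChain can reach OpenAI."""
import os


def ask(llm, question: str) -> str:
    """Send one prompt to a LangChain chat model and return the reply text."""
    reply = llm.invoke(question)  # chat models return an AIMessage
    return reply.content


def main() -> None:
    try:
        from dotenv import load_dotenv
        from langchain_openai import ChatOpenAI  # assumed: pip install langchain-openai
    except ImportError as exc:
        print(f"Missing package: {exc.name}. Revisit the install step.")
        return
    load_dotenv()  # reads OPENAI_API_KEY from .env
    if not os.getenv("OPENAI_API_KEY"):
        print("OPENAI_API_KEY is not set; check your .env file.")
        return
    llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)
    print(ask(llm, "Reply with one short sentence confirming you are online."))


if __name__ == "__main__":
    main()
```

Passing the model into `ask()` rather than creating it inside keeps the function easy to test with a stand-in object, so you don't spend tokens every time you check your own code.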

4. Run a quick test to see it in action

Let’s walk through a basic example of how LangChain actually works.

At its core, LangChain helps you build a chain of actions: start with a user prompt, pass it to a language model (like GPT-3.5), and optionally do more with the output (like saving it or running tools).

This first example focuses on the simplest chain:

Send a prompt → get a response.

Now let’s create a new Python script: simple_chain.py

Then run:

You should get a clean, readable response from the model, served through LangChain. You just created your first LangChain-powered application.
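As a sketch of what simple_chain.py could look like, the example below pipes a prompt template into a chat model and then into a plain-text parser using LangChain's `|` composition. The prompt wording is illustrative, and the langchain-openai package and `gpt-3.5-turbo` model name are assumptions, as in the quick test.

```python
"""simple_chain.py: prompt template -> chat model -> plain-text output."""
import os
from typing import Optional


def build_chain(llm):
    """Compose a prompt template, a chat model, and a string parser into one chain."""
    from langchain_core.output_parsers import StrOutputParser
    from langchain_core.prompts import ChatPromptTemplate

    prompt = ChatPromptTemplate.from_template(
        "Explain {topic} to a business audience in two sentences."
    )
    # `|` feeds each step's output into the next: prompt -> model -> text.
    return prompt | llm | StrOutputParser()


def main() -> Optional[str]:
    try:
        from dotenv import load_dotenv
        from langchain_openai import ChatOpenAI  # assumed integration package
    except ImportError as exc:
        print(f"Missing package: {exc.name}. Revisit the install step.")
        return None
    load_dotenv()
    if not os.getenv("OPENAI_API_KEY"):
        print("OPENAI_API_KEY is not set; check your .env file.")
        return None
    chain = build_chain(ChatOpenAI(model="gpt-3.5-turbo", temperature=0))
    answer = chain.invoke({"topic": "agentic AI"})
    print(answer)
    return answer


if __name__ == "__main__":
    main()
```

Because the chain is just a sequence of steps, adding more later (a retriever before the prompt, a tool after the model) means extending the pipe rather than rewriting the script.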

Where LangChain is already making a difference

Let’s explore how LangChain is making a difference in real-world projects. By making AI applications smarter and more adaptable, LangChain is revolutionising industries.

Here are some exciting use cases to explore!

1. Customer support automation

Most customer support bots follow a script.

They’re good at answering FAQs, but the moment a question falls outside the flowchart, the experience breaks. This is where LangChain makes a measurable difference.

By combining LLMs with real-time access to CRMs, databases, and internal documents, a LangChain agent looks up the right data and responds with context.
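Stripped to its essentials, that lookup is a loop: the model picks a tool and its arguments, your code runs the tool against a live system, and the result goes back into the model's context. The plain-Python sketch below shows only that loop. `lookup_order`, the in-memory `ORDERS` table standing in for a CRM, and the hard-coded tool choice (which a real agent would get from the LLM) are all hypothetical; in LangChain itself, tools are typically declared with the `@tool` decorator from `langchain_core.tools`.

```python
import json
from typing import Callable, Dict

# Hypothetical stand-in for a CRM or order database.
ORDERS = {
    "A-1001": {"status": "shipped", "carrier": "DHL", "eta": "2 days"},
    "A-1002": {"status": "processing", "carrier": None, "eta": "unknown"},
}


def lookup_order(order_id: str) -> str:
    """Tool: fetch one order record as JSON, or an error message."""
    record = ORDERS.get(order_id)
    return json.dumps(record) if record else f"No order found with id {order_id}."


# Registry of tools the model is allowed to call, keyed by name.
TOOLS: Dict[str, Callable[..., str]] = {"lookup_order": lookup_order}


def run_tool_call(tool_call: dict) -> str:
    """Execute a tool call shaped like {"name": ..., "args": {...}}."""
    tool = TOOLS.get(tool_call["name"])
    if tool is None:
        return f"Unknown tool: {tool_call['name']}"
    return tool(**tool_call["args"])


def build_context(question: str, tool_call: dict) -> str:
    """Combine the customer's question with live data for the model's next turn."""
    observation = run_tool_call(tool_call)
    return f"Customer question: {question}\nTool result: {observation}"


if __name__ == "__main__":
    # In a real agent, the LLM would choose this tool call from the question.
    call = {"name": "lookup_order", "args": {"order_id": "A-1001"}}
    print(build_context("Where is my order A-1001?", call))
```

The name-plus-arguments dictionary loosely mirrors how LangChain represents tool calls, which is why the registry lookup is the only glue needed between the model's decision and the system it acts on.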

For example, Cisco deployed LangChain, LangSmith, and LangGraph to enhance its support operations. The result? It now automates 60% of nearly 1.8 million support cases, using AI-driven agents that handle context-rich customer conversations and system integration.

2. Personalised recommendations in e-commerce

In e-commerce, relevance isn’t a nice-to-have; it’s expected. But most recommendation systems rely on basic rules (“customers who bought X also bought Y”), which lead to generic, uninspired suggestions.

With LangChain, you can build agents that tailor suggestions to individual users in real time by combining behaviour data, product catalogues, and context-aware reasoning, delivering more personalised shopping experiences than generic recommendation systems can.

Here’s what the data says:

By 2025, the global AI-enabled e-commerce market is projected to reach $8.65 billion, up from $7.57 billion in 2024, and is expected to roughly double to $17.1 billion by 2030.
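The arithmetic behind these projections is easy to check. Using only the figures quoted above, the sketch below computes the one-year growth and the implied compound annual growth rate (CAGR) to 2030: about 14.3% from 2024 to 2025, a 2030 figure about 1.98 times the 2025 figure, and an implied CAGR of about 14.6%.

```python
def growth(start: float, end: float) -> float:
    """Fractional change from start to end (0.10 means +10%)."""
    return end / start - 1


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate over `years` years."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    # Market figures quoted above, in billions of US dollars.
    print(f"2024 to 2025 growth: {growth(7.57, 8.65):.1%}")
    print(f"2030 vs 2025 multiple: {17.1 / 8.65:.2f}x")
    print(f"Implied 2025-2030 CAGR: {cagr(8.65, 17.1, 5):.1%}")
```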

3. Document and knowledge management

Managing large document collections like contracts, reports, or compliance files can quickly become a bottleneck. Searching through PDFs, parsing nuanced language, and summarising key clauses is time-consuming and prone to errors.

LangChain simplifies this by handling the process end-to-end. It can read a wide range of file types, such as PDFs, Word docs, and web pages, and split them into smaller, structured chunks that are easier to work with. These chunks are stored in vector databases, allowing for fast and accurate semantic search.

When a user asks a question, LangChain pulls only the most relevant information into the prompt. That means responses are not just quick but also grounded in the right context.
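The split, store, and retrieve flow can be illustrated without any dependencies. This toy sketch replaces the embedding model and vector database (such as ChromaDB) with word-count vectors and cosine similarity, so it shows the idea rather than how LangChain implements it; in LangChain, text splitters such as `RecursiveCharacterTextSplitter` and a vector store fill these roles. All function names here are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def split_into_chunks(text: str, chunk_size: int = 40, overlap: int = 10) -> List[str]:
    """Split text into overlapping word windows so text near a boundary lands in both chunks."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be between 0 and chunk_size - 1")
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    return [" ".join(words[i:i + chunk_size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def _vector(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system uses an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_chunks(question: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the question."""
    q = _vector(question)
    return sorted(chunks, key=lambda c: _cosine(q, _vector(c)), reverse=True)[:k]


def build_prompt(question: str, chunks: List[str], k: int = 2) -> str:
    """Put only the most relevant chunks into the prompt sent to the LLM."""
    context = "\n---\n".join(top_chunks(question, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

Keeping the prompt limited to the top-ranked chunks is what keeps answers grounded: the model sees the clauses that matter instead of the whole document.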

4. AI-powered data analysis and reporting

Large datasets and frequent reporting cycles can slow decisions and stretch teams thin. LangChain agents address this by automating the entire pipeline: querying databases, analysing results, visualising trends, and generating summaries.

Take MUFG Bank as an example. Before using LangChain, analysts spent hours digging through data, writing queries, and putting together presentations. The whole process was slow and scattered.

Now, one LangChain agent can do it all in seconds - fetch data, build visuals, and generate insights. The result? A 10× boost in research efficiency and a lot more time saved for the team.
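The first two stages of that pipeline, querying a database and condensing the results into text a model can reason over, can be sketched with Python's built-in `sqlite3`. The `sales` table and its figures are made up for illustration. In a full agent, the LLM would write the SQL and the narrative summary itself, for example through LangChain's SQL database tooling, which this sketch does not use.

```python
import sqlite3
from typing import List, Tuple


def load_sample_data(conn: sqlite3.Connection) -> None:
    """Create a small, made-up sales table to query against."""
    conn.execute("CREATE TABLE sales (region TEXT, quarter TEXT, revenue REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [("North", "Q1", 120.0), ("North", "Q2", 150.0),
         ("South", "Q1", 90.0), ("South", "Q2", 80.0)],
    )


def revenue_by_region(conn: sqlite3.Connection) -> List[Tuple[str, float]]:
    """Step 1: query the database."""
    return conn.execute(
        "SELECT region, SUM(revenue) FROM sales GROUP BY region ORDER BY region"
    ).fetchall()


def summarise(rows: List[Tuple[str, float]]) -> str:
    """Step 2: condense query results into short text an LLM can reason over."""
    total = sum(revenue for _, revenue in rows)
    if not total:
        return "No revenue recorded."
    lines = [f"{region}: {revenue:.0f} ({revenue / total:.0%} of total)"
             for region, revenue in rows]
    return "Revenue by region\n" + "\n".join(lines)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_sample_data(conn)
    print(summarise(revenue_by_region(conn)))
```

Handing the model a compact summary like this, rather than raw rows, keeps prompts small and makes the generated insights easier to check against the source data.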

Read more on how Agentic AI in Data Analytics powers similar data querying, reporting, and insights automation across industries.

Is LangChain free? Here’s what you’ll pay (and won’t)

The LangChain framework is completely free to use.

As it’s an open-source project, there are no licensing fees, no premium tiers, and no paywalls. You can clone the code, modify it, deploy it, or build on top of it - at zero cost.

All of LangChain's core features are available for free, from its advanced agentic AI tools to the ability to integrate multiple language models (LLMs) and connect with external APIs.

That makes it an ideal starting point for:

- Solo developers experimenting with LLM agents
- Startups building MVPs
- Enterprises testing AI workflows at scale

However, keep in mind:

- LangChain relies on third-party services, such as OpenAI or Hugging Face, to provide access to LLMs and external APIs, unless you host a model yourself.
- These services may charge usage fees, which vary with the models you choose and the scale of your project.
- For example, OpenAI’s GPT models require an API key, and pricing is based on the volume of tokens processed.
- LangChain itself remains free and open-source, and you can use it to connect to many free or low-cost LLMs and tools as well.

You’ll only pay for the external APIs and services you use, making it a highly cost-effective choice for businesses and developers alike.
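Because provider pricing is tied to token volume, a rough budget is a single multiplication. In this sketch the workload and both prices are placeholders, not real rates; quoting prices per million tokens is a common convention, but check your provider's pricing page for the model you choose.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_million: float,
                  output_price_per_million: float) -> float:
    """Estimated bill in dollars: tokens times the per-million-token price."""
    return (input_tokens * input_price_per_million
            + output_tokens * output_price_per_million) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests/month, ~500 input and ~200 output tokens each.
    requests = 10_000
    # Placeholder prices in dollars per million tokens; substitute real ones.
    cost = estimate_cost(requests * 500, requests * 200, 0.50, 1.50)
    print(f"Estimated monthly cost: ${cost:.2f}")
```

Input and output tokens are priced separately because many providers charge more for generated text than for the prompt.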

If AI exploration is your goal, LangChain is a fantastic starting point.

5 reasons why developers love building with LangChain

LangChain has many benefits, providing a developer-friendly framework that eases the creation of complex AI systems.

Here’s why developers are drawn to LangChain:

1. Ease of use

Simplicity is at the core of LangChain’s design.

It removes the hurdles of working with language models, offering a more accessible route for developers. With its modular structure, you can easily integrate a range of tools, APIs, and LLMs without writing much custom code.

Imagine having a toolkit that manages the difficult tasks, giving you more time to focus on creating your app’s fundamental elements.

2. Flexibility

With LangChain, you have the freedom to explore and adjust to different use cases.

It works seamlessly with OpenAI’s GPT models, Hugging Face offerings, and other custom LLMs. This versatility allows you to customise your application, experiment with various models, and easily switch between them when needed.

3. Powerful agentic AI

Unlike traditional workflows that follow set paths, LangChain’s agents can dynamically select the best tools and processes to tackle problems.

Rather than simply responding to queries, your system will think, adapt, and adjust depending on the situation. LangChain enables the creation of AI systems that are intelligent, adaptable, and independent.

4. Support for external tools

One of LangChain’s most impressive features is its integration capabilities.

It allows you to connect with external APIs and data sources with little effort, whether that’s pulling live data from Google Search or creating images through DALL-E. LangChain makes it easy to extend the power of your LLMs by linking them with external tools.

5. Open-source and community-driven

LangChain’s open-source model means it’s always evolving, with developers from all corners of the globe contributing to its growth.

This provides you with a continuously expanding library of resources, tutorials, and pre-built integrations. The active community makes it easier to overcome obstacles and stay current with the latest AI trends.

Looking ahead: How LangChain is changing the way we build with AI

As AI adoption accelerates across industries, the conversation is shifting.

It’s no longer about whether to use AI, but how to operationalise it in meaningful, scalable ways.

That’s exactly where the LangChain framework gives an edge.

From customer support and compliance to product recommendations and analytics, LangChain agents bring structure, memory, reasoning, and tool integration into a single, flexible layer. Businesses aren't just automating, they’re building AI systems that can understand, act, and adapt.

And because LangChain is open source, the barrier to entry stays low, while the ceiling for innovation stays high.

At GrowthJockey, a startup accelerator in India, we’ve worked closely with founders, product teams, and operators to build intelligent, LLM-powered systems using tools like LangChain. Whether you’re just starting to explore LangChain or already running it in production, our team actively tests, deploys, and scales solutions across real-world environments.

So if you're serious about building with agentic AI, don’t just build with prompts. Build systems.

LangChain FAQs

1. What is the LangChain framework and why use it?

The LangChain framework turns a standalone LLM into an agent that remembers context, calls APIs, queries vector stores, and takes multi-step action. Businesses choose LangChain in AI projects to add reasoning and tool use without writing heavy glue code.

2. Is LangChain open source and what license covers it?

Yes. LangChain's source code is released under the MIT license, giving developers the freedom to modify, self-host, and commercialise their LangChain applications with no vendor lock-in.

3. Which models work best as LangChain LLM back-ends?

There is no single best choice. LangChain integrates with hosted models such as GPT-4 and Claude as well as open-weight options such as Falcon and Llama 2. Because the framework gives these models a common interface, you can swap engines or run hybrid pipelines without rewriting your chains.

4. What are some LangChain examples for building an agent?

Common LangChain examples start with a retrieval-augmented Q&A bot, a document-summarisation tool, or a sales-email generator. Each demo shows how a LangChain agent fetches data, reasons over it, then returns an action-ready response, often in fewer than 50 lines of code.