How Agentic AI Frameworks Are Shaping the Future of Decision-Making

Agentic AI frameworks unify perception, reasoning, memory, and action into systems that learn and act autonomously. Comparing AutoGen, LangChain, LangGraph, and LlamaIndex reveals trade-offs in collaboration, modularity, and data grounding that can guide your own build. A three-phase deployment roadmap (use-case planning, modular development, and containerised scaling), combined with edge-cloud inference and safety checks, points to where agentic AI frameworks are heading.

Agentic AI frameworks are the foundation for creating autonomous systems that can perceive their environment, make decisions, and take action with little human input.

Unlike traditional AI, which simply responds to specific instructions, agentic AI systems can pursue goals on their own. They adapt to new situations and continuously learn from their experiences.

These frameworks move AI from passive assistance to active participation in decision-making and workflows, which is why a well-designed agentic AI architecture matters for every decision the system makes.

Read this blog to learn about agentic AI frameworks, their key components, and how they're changing business operations across industries. Whether you're considering AI solutions or just want to keep up with the latest tech trends, this guide offers essential insights into the agentic AI framework future.

Understanding the agentic AI architecture

An agentic AI architecture follows a structured workflow that enables autonomous decision-making and action. This workflow forms a continuous feedback loop, so agents can operate independently while steadily improving their performance.

Here’s a simplified version of how the workflow process usually goes:

- Input and planning: User queries, environmental data, or goals feeding into strategy formation via goal decomposition and approach selection.

- Retrieval: Access to internal knowledge, external datasets, or specialised tools supplying necessary context.

- Tool interaction: Invocation of external systems and specialised functions for real-world engagement.

- Evaluation: Outcome assessment against objectives, with feedback loops driving continuous improvement.
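
The four steps above can be sketched as a small loop. This is only an illustration under stated assumptions, not the API of any particular framework: `plan`, `retrieve`, `call_tool`, and `evaluate` are hypothetical helper names, and the knowledge base and order-lookup tool are toy stand-ins.

```python
from dataclasses import dataclass, field

# Toy stand-ins (assumptions for illustration only).
KNOWLEDGE = {"refund": "Refunds are allowed within 30 days."}
TOOLS = {"lookup_order": lambda order_id: {"order_id": order_id, "age_days": 12}}


@dataclass
class RunLog:
    steps: list = field(default_factory=list)


def plan(goal: str) -> list[str]:
    """Input and planning: decompose the goal into ordered sub-tasks."""
    return ["retrieve_policy", "check_order", "decide"]


def retrieve(topic: str) -> str:
    """Retrieval: fetch context from an internal knowledge source."""
    return KNOWLEDGE.get(topic, "")


def call_tool(name: str, *args):
    """Tool interaction: invoke an external function by name."""
    return TOOLS[name](*args)


def evaluate(result: dict) -> bool:
    """Evaluation: check the outcome against the objective."""
    return result.get("decision") in {"approve", "reject"}


def run_agent(goal: str, order_id: str, max_attempts: int = 3) -> tuple[dict, RunLog]:
    log = RunLog()
    result: dict = {}
    for attempt in range(1, max_attempts + 1):
        context, order = "", {}
        for step in plan(goal):
            log.steps.append(step)
            if step == "retrieve_policy":
                context = retrieve("refund")
            elif step == "check_order":
                order = call_tool("lookup_order", order_id)
            elif step == "decide":
                within_window = order["age_days"] <= 30 and "30 days" in context
                result = {"decision": "approve" if within_window else "reject",
                          "attempt": attempt}
        if evaluate(result):  # feedback loop: retry only if the outcome fails
            break
    return result, log


result, log = run_agent("handle refund request", "A-1001")
print(result)  # {'decision': 'approve', 'attempt': 1}
```

In a real system the planner and the decision step would typically be model calls; keeping each stage behind its own function is what makes the loop easy to inspect and test.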

Pro tip: Sketch your feedback loop on a whiteboard before coding - visualizing input→plan→act→evaluate helps spot missing steps early.

Technical foundations for scalable, high-performance agentic AI

Agents coordinate through lightweight protocols, like leader-election and publish-subscribe channels, to distribute tasks and keep everyone in sync.
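
To make the publish-subscribe idea concrete, here is a minimal in-process sketch. It assumes a single Python process and synchronous delivery; the `MessageBus` class, topic name, and agent labels are hypothetical, and a production setup would more likely rely on a message broker.

```python
from collections import defaultdict
from typing import Callable


class MessageBus:
    """In-memory publish-subscribe channel (single process, synchronous)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        """Deliver the message to every subscriber; return the delivery count."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = MessageBus()
received: list[tuple[str, str]] = []

# Two hypothetical agents listen on the same topic.
bus.subscribe("tasks.pricing", lambda m: received.append(("pricing-agent", m["task"])))
bus.subscribe("tasks.pricing", lambda m: received.append(("audit-agent", m["task"])))

delivered = bus.publish("tasks.pricing", {"task": "reprice SKU-42"})
print(delivered, received)
```

The publisher never needs to know which agents are listening, which is what keeps this style of coordination lightweight.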

To scale, you can break down agent groups by function and spin them up or down automatically with container tools like Kubernetes, letting you handle spikes without downtime.
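
As a rough sketch of what "spin them up or down automatically" means in numbers, the function below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: scale the replica count by the ratio of the observed metric to its target, then round up. The replica bounds and metric values are illustrative assumptions, and the real autoscaler adds tolerances and stabilisation windows that are left out here.

```python
import math


def desired_replicas(current: int, metric: float, target: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale replicas in proportion to how far the metric is from its target."""
    if target <= 0:
        raise ValueError("target must be positive")
    raw = math.ceil(current * metric / target)
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(current=4, metric=90.0, target=60.0))   # 6
print(desired_replicas(current=4, metric=15.0, target=60.0))   # 1
print(desired_replicas(current=8, metric=200.0, target=50.0))  # 10 (capped)
```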

Pro tip: Instrument key APIs and caches in your first sprint. Even a simple metric like cache hit rate can save hours of chasing bottlenecks later.

Performance comes from smart caching (Redis or in-memory), batching calls in async pipelines, and using optimised runtimes (ONNX Runtime, TensorRT) or GPUs for heavy inference. Track each of these with simple monitoring so you can spot trouble fast.
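
The sketch below combines in-memory caching, concurrent batching, and a cache hit-rate metric using only the Python standard library. The `embed` function is a hypothetical stand-in for an expensive model call, and the sleep simulates I/O latency; neither reflects a specific product's API.

```python
import asyncio
from functools import lru_cache


@lru_cache(maxsize=1024)
def embed(text: str) -> tuple[float, ...]:
    """Stand-in for an expensive model call; results are cached in memory."""
    return (float(len(text)), float(sum(map(ord, text)) % 97))


async def score(text: str) -> float:
    """Simulate an I/O-bound call that benefits from running concurrently."""
    await asyncio.sleep(0.01)
    return embed(text)[0]


async def score_batch(texts: list[str]) -> list[float]:
    # gather runs the calls concurrently and preserves input order
    return await asyncio.gather(*(score(t) for t in texts))


scores = asyncio.run(score_batch(["alpha", "beta", "alpha", "gamma", "beta"]))

# lru_cache exposes hit and miss counters, which is enough for a first metric.
info = embed.cache_info()
hit_rate = info.hits / (info.hits + info.misses)
print(scores, hit_rate)  # [5.0, 4.0, 5.0, 5.0, 4.0] 0.4
```

Five requests touch only three distinct inputs, so two of them are served from the cache. Swapping `lru_cache` for a shared store such as Redis would follow the same pattern across processes.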

Essential components that power agentic AI frameworks

Successful autonomous systems require multiple specialised components working in harmony. Traditional AI operates with single-purpose modules, but agentic frameworks integrate these core elements to enable true autonomy and intelligent decision-making.

| Component | Role |
|---|---|
| Perception Module | Collects and processes data from various sources, transforming inputs into structured information for decision-making |
| Reasoning Engine | Analyses information, generates plans, and makes decisions based on goals and available data |
| Memory System | Stores experiences and learned patterns, enabling agents to recall relevant information and build upon past interactions |
| Action Framework | Translates decisions into concrete actions through API calls, tool use, or system interactions |
| Learning Mechanism | Enables continuous improvement by analysing outcomes and refining future behaviour based on experience |
| Safety Controls | Implements guardrails and validation mechanisms to ensure actions align with intended goals and ethical guidelines |

Each agentic AI framework component plays an important part in how the framework works. Their combined abilities create the sophisticated autonomous behaviour seen in true agentic systems.

Comparing 4 agentic AI frameworks

Agentic AI frameworks take different approaches, each with its own strengths and design philosophy. A quick comparison shows which one best suits your use case.

1. AutoGen

AutoGen is Microsoft’s open-source multi-agent conversation framework, in which agents coordinate by exchanging messages. In effect, it turns isolated LLM calls into a cooperative AI team.

Agents negotiate plans, call tools or run code, and merge results with little or no human intervention. This multi-agent exchange can produce faster, more creative solutions, like a squad of experts working through a problem in parallel.

2. LangChain

LangChain is a modular toolkit for LLM apps that standardises interfaces to models, databases, and tools, and stitches them into a unified pipeline.

Its plug-and-play modules let you swap components easily, so you can assemble custom decision workflows quickly.

3. LangGraph

Built on LangChain, LangGraph adds graph-based workflows and state management, so a decision flow can be laid out as a visual map. Nodes are steps that read and update a shared state, and edges define how control moves between them, which makes complex, branching logic transparent, debuggable, and reproducible.
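
The graph idea can be illustrated without any library. The sketch below is plain Python and deliberately does not use LangGraph's API: node functions update a state dictionary, and edge functions pick the next node. All names (`classify`, `escalate`, `run_graph`) and the 1000 threshold are hypothetical.

```python
from typing import Callable, Optional

State = dict
Node = Callable[[State], State]


def classify(state: State) -> State:
    return {**state, "risk": "high" if state["amount"] > 1000 else "low"}


def auto_approve(state: State) -> State:
    return {**state, "status": "approved"}


def escalate(state: State) -> State:
    return {**state, "status": "needs_review"}


NODES: dict[str, Node] = {"classify": classify, "auto_approve": auto_approve,
                          "escalate": escalate}

# Each edge function inspects the state and names the next node (None = stop).
EDGES: dict[str, Callable[[State], Optional[str]]] = {
    "classify": lambda s: "escalate" if s["risk"] == "high" else "auto_approve",
    "auto_approve": lambda s: None,
    "escalate": lambda s: None,
}


def run_graph(start: str, state: State) -> tuple[State, list[str]]:
    path, current = [], start
    while current is not None:
        path.append(current)
        state = NODES[current](state)   # node updates the shared state
        current = EDGES[current](state)  # edge decides where to go next
    return state, path


final_state, path = run_graph("classify", {"amount": 2500})
print(path, final_state["status"])  # ['classify', 'escalate'] needs_review
```

Recording the path alongside the final state is what makes a branching run easy to replay and debug.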

4. LlamaIndex

Formerly GPT Index, LlamaIndex is a data-centric framework[1] for grounding LLMs via Retrieval-Augmented Generation.

LlamaIndex closes the gap between general LLM knowledge and your data by indexing documents, APIs, or databases into a retrieval layer. Agents can then ground their outputs in retrieved content, which reduces hallucinations and builds trust.
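
To show the retrieval-then-ground pattern in miniature, here is a toy sketch in plain Python. It does not use LlamaIndex's API; the documents, the word-overlap scoring rule, and the `retrieve` and `build_prompt` helpers are all assumptions made for illustration. Real retrieval layers typically use embeddings rather than word overlap.

```python
import re

# Hypothetical document store.
DOCS = {
    "returns": "Items can be returned within 30 days of delivery.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "warranty": "Hardware is covered by a 12 month warranty.",
}


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, top_k: int = 1) -> list[tuple[str, str]]:
    """Rank documents by word overlap with the query (a toy scoring rule)."""
    q = tokenize(query)
    ranked = sorted(DOCS.items(), key=lambda kv: len(q & tokenize(kv[1])), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str) -> str:
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


prompt = build_prompt("How many days does shipping take?")
print(prompt)
```

Tagging each passage with its source id lets the model's answer be traced back to a specific document.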

How to build and deploy your agentic AI framework

Follow a clear, three-phase process to translate your vision into a working framework. You’ll first identify and plan high-impact use cases, then iterate through modular development and testing, and finally deploy at scale with robust monitoring and continuous optimisation.

Phase 1: Use case identification and planning

Begin by defining the precise business challenge your autonomous system will address. Develop detailed user stories that describe what the system will perceive, decide and execute, whether that’s industrial robots optimising logistics or software agents automating customer triage.

Establish success metrics up front (e.g., throughput targets, error tolerances, response times) so every milestone is measurable.

Prioritise use cases based on strategic impact and feasibility, and create a lean plan that avoids unnecessary features while making sure you meet all organisational goals.

Phase 2: Development methodology and testing

Adopt an iterative, modular methodology to reduce risk and accelerate feedback. Start with a minimal viable capability, such as sensor integration or API orchestration, and validate it through comprehensive unit tests.

Pro tip: Build each functional block as an interchangeable module, allowing you to swap algorithms or components as requirements evolve.
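
One way to keep blocks interchangeable in Python is to code against a small interface. The sketch below uses `typing.Protocol`; the `Planner` interface, both planner classes, and the stock thresholds are hypothetical examples rather than part of any framework.

```python
from typing import Protocol


class Planner(Protocol):
    def next_action(self, observation: dict) -> str: ...


class RulePlanner:
    def next_action(self, observation: dict) -> str:
        return "restock" if observation["stock"] < 10 else "wait"


class ThresholdPlanner:
    def __init__(self, threshold: int) -> None:
        self.threshold = threshold

    def next_action(self, observation: dict) -> str:
        return "restock" if observation["stock"] < self.threshold else "wait"


class Agent:
    def __init__(self, planner: Planner) -> None:
        self.planner = planner  # any object with next_action() can be plugged in

    def step(self, observation: dict) -> str:
        return self.planner.next_action(observation)


obs = {"stock": 15}
print(Agent(RulePlanner()).step(obs))                   # wait
print(Agent(ThresholdPlanner(threshold=20)).step(obs))  # restock
```

Because `Agent` depends only on the interface, a planner can be replaced without touching the rest of the system or its tests.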

Automate your test suite to include unit tests for core logic, integration tests for external interfaces and simulation-based validation for real-world scenarios.

Then, incorporate resilience testing by simulating network latency or service failures to verify graceful degradation and recovery.
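
A resilience test of this kind can start very small. The sketch below assumes a hypothetical `FlakyService` test double that raises a fixed number of simulated outages, and a `fetch_with_fallback` helper that retries and then degrades to a cached value. The retry count and fallback value are illustrative.

```python
class FlakyService:
    """Test double that fails a fixed number of times before succeeding."""

    def __init__(self, failures: int) -> None:
        self.failures = failures
        self.calls = 0

    def fetch(self) -> str:
        self.calls += 1
        if self.calls <= self.failures:
            raise ConnectionError("simulated outage")
        return "live-data"


def fetch_with_fallback(service: FlakyService, retries: int = 2,
                        fallback: str = "cached-data") -> str:
    """Retry a few times, then degrade gracefully to a fallback value."""
    for _ in range(retries + 1):
        try:
            return service.fetch()
        except ConnectionError:
            continue
    return fallback


recovering = FlakyService(failures=2)   # comes back on the third call
down = FlakyService(failures=10)        # stays down for the whole test

print(fetch_with_fallback(recovering))  # live-data
print(fetch_with_fallback(down))        # cached-data
```

The first case checks recovery and the second checks graceful degradation, which are the two behaviours the paragraph above asks you to verify.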

Phase 3: Deployment and scaling strategies

- Containerise each microservice or agent component using Docker, then orchestrate across your infrastructure with Kubernetes or equivalent platforms.

- Set up rules that add or remove resources automatically based on live data (like CPU load, queue lengths, or your own key metrics) so the system always has just the right capacity.

- Roll out new features gradually with canary releases and feature flags, limiting them to a small user group first. This lowers risk and lets you quickly turn things off if something goes wrong.
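
A percentage-based feature flag, one common way to implement the gradual rollout in the last step, can be sketched as follows. This is an assumption about how you might build it, not a description of a specific flagging product: each user is hashed into a stable bucket from 0 to 99, and the feature is on when the bucket is below the rollout percentage. The feature name and user ids are hypothetical.

```python
import hashlib


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """Deterministically place a user in a 0-99 bucket and compare to percent."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


users = [f"user-{i}" for i in range(1000)]
canary = [u for u in users if in_rollout(u, "new-planner", 5)]
print(f"{len(canary)} of {len(users)} users see the canary")
```

Because the bucket depends only on the feature and user id, a user who is in the 5% group stays in the group as the percentage grows, and setting the percentage to 0 switches the feature off for everyone.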

Pro tip: Manage your Kubernetes manifests with GitOps (e.g., Argo CD). That way, any drift between Git and the cluster is immediately obvious.

Finally, use monitoring tools like Prometheus, Grafana or the ELK stack to track system health, performance trends and errors in real time.

Following this roadmap means your autonomous system will deploy reliably, scale when needed and keep improving over time.

Building production-ready agentic systems

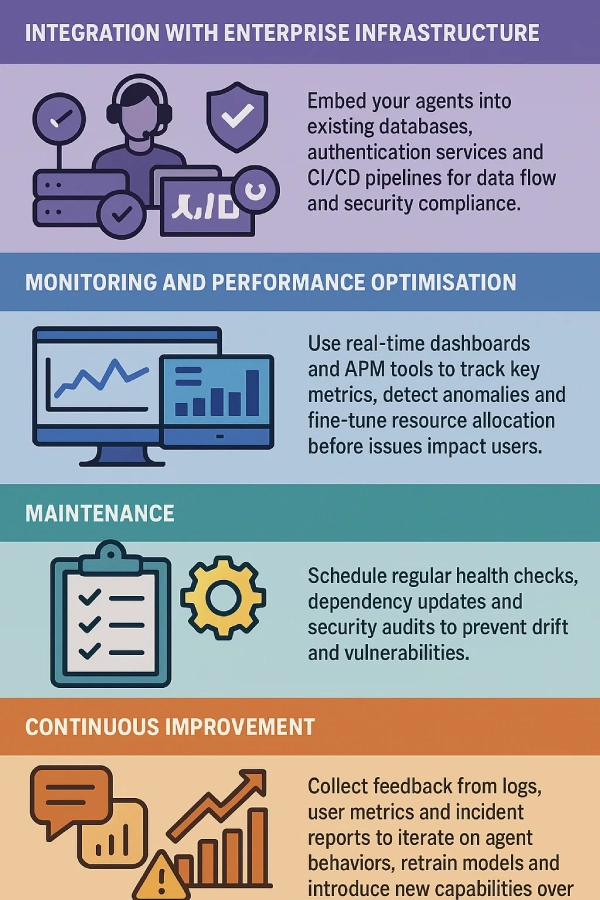

Putting your AI agents into production is about more than keeping them running; it is what leads to faster, smarter decisions. When you hook agents into your systems, watch their performance, and improve them over time, they deliver accurate insights, adapt to change, and produce better results:

- Integration with enterprise infrastructure: Embed your agents into existing databases, authentication services and CI/CD pipelines for data flow and security compliance.

- Monitoring and performance optimisation: Use real-time dashboards and APM tools to track key metrics, detect anomalies and fine-tune resource allocation before issues impact users.

- Maintenance: Schedule regular health checks, dependency updates and security audits to prevent drift and vulnerabilities.

- Continuous improvement: Collect feedback from logs, user metrics and incident reports to iterate on agent behaviours, retrain models and introduce new capabilities over time.

Enterprise governance and safety frameworks

As AI agents take on more decision-making, strong governance and safety measures deserve more attention. These frameworks help ensure that automated decisions are trustworthy, transparent, and aligned with your organisation’s goals, so that AI can guide strategy and operations reliably.

Simple guardrails keep agents reliable and fair[2]. Include approval steps and real-time alerts for high-impact actions so experts can review or pause decisions.

Run quick audits and fairness tests before rollout to catch bias and uphold your values. Embed audit logs and privacy controls so that AI-driven choices can be checked against the regulations that apply to you, such as GDPR, HIPAA, or SOX.
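
An approval step with an audit trail might look like the sketch below. The `Guardrail` class, the threshold value, and the `approver` callback are hypothetical; in practice the approver would route to a human reviewer and the log would go to durable storage.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Action:
    name: str
    amount: float


@dataclass
class Guardrail:
    approval_threshold: float            # hypothetical policy value
    approver: Callable[[Action], bool]   # human-in-the-loop hook
    audit_log: list[dict] = field(default_factory=list)

    def execute(self, action: Action, run: Callable[[Action], str]) -> str:
        needs_approval = action.amount >= self.approval_threshold
        approved = self.approver(action) if needs_approval else True
        outcome = run(action) if approved else "blocked"
        # Every decision is recorded, whether it ran or not.
        self.audit_log.append({"action": action.name, "amount": action.amount,
                               "needs_approval": needs_approval, "outcome": outcome})
        return outcome


guard = Guardrail(approval_threshold=10_000, approver=lambda a: False)
print(guard.execute(Action("refund", 50), run=lambda a: "done"))             # done
print(guard.execute(Action("wire_transfer", 25_000), run=lambda a: "done"))  # blocked
```

Low-impact actions pass straight through, while anything at or above the threshold waits for the approver, and both paths leave an audit record.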

Pro tip: Use a simple “bias dashboard” (even a shared spreadsheet) to track model fairness metrics over time. There is no need to build a custom UI at first.
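
As one example of a metric such a dashboard could track, the sketch below computes the gap in approval rates between two groups, often called the demographic parity difference. The decision records and group labels are made-up sample data, and which fairness metric is appropriate depends on your use case.

```python
def approval_rate(decisions: list[tuple[str, bool]], group: str) -> float:
    """Share of approved decisions within one group."""
    outcomes = [approved for g, approved in decisions if g == group]
    return sum(outcomes) / len(outcomes)


def parity_gap(decisions: list[tuple[str, bool]], group_a: str, group_b: str) -> float:
    """Absolute difference in approval rates between two groups."""
    return abs(approval_rate(decisions, group_a) - approval_rate(decisions, group_b))


# Made-up sample: (group label, approved?)
decisions = [("A", True), ("A", True), ("A", False), ("A", True),
             ("B", True), ("B", False), ("B", False), ("B", True)]

print(parity_gap(decisions, "A", "B"))  # 0.25
```

Logging this number after each model release is enough to see whether the gap is widening or narrowing over time.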

Top real-world applications of agentic AI frameworks

Agentic AI frameworks are already in practical use, transforming operations across many industries. Two standout applications today are:

1. Intelligent financial analysis and decision support

Financial institutions are turning to agentic AI frameworks to build intelligent systems that autonomously analyse market conditions, evaluate investment opportunities, and optimise portfolios.

These agents work around the clock, tracking market indicators and economic trends to swiftly identify potential risks and opportunities.

2. Supply chain optimisation and logistics management

Modern systems go beyond basic inventory management, offering more advanced features like route optimisation, demand forecasting, and disruption management.

By weighing factors like weather, transport costs, and regional demand, they can make better-informed decisions and balance competing priorities more effectively than traditional methods.

The future of agentic AI frameworks

Over the next few years[3], agentic AI is expected to begin integrating real-time sensor fusion, combining IoT, vision and LiDAR streams directly in decision loops. This will give systems live situational awareness.

At the same time, lightweight formal verification (e.g., TLA+ model checks or runtime monitors) will enforce safety constraints before critical actions, ensuring predictable behaviour in high-stakes settings.

Finally, hybrid edge-cloud setups will handle quick decisions on local devices (sub-100 ms) and shift heavy workloads to the cloud, giving both speed and scale.
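
A routing rule for such a hybrid setup could be as simple as the sketch below. The 100 ms budget comes from the figure above; the `Task` fields, the compute-unit capacity, and the choice to send urgent but heavy tasks to the cloud are assumptions made for illustration.

```python
from dataclasses import dataclass

EDGE_LATENCY_BUDGET_MS = 100   # the sub-100 ms figure from the text
EDGE_MAX_COMPUTE_UNITS = 5     # hypothetical capacity of a local device


@dataclass
class Task:
    name: str
    deadline_ms: int       # how quickly a decision is needed
    compute_units: int     # rough cost estimate (hypothetical unit)


def route(task: Task) -> str:
    """Send urgent, light tasks to the edge; everything else to the cloud."""
    urgent = task.deadline_ms < EDGE_LATENCY_BUDGET_MS
    light = task.compute_units <= EDGE_MAX_COMPUTE_UNITS
    return "edge" if urgent and light else "cloud"


print(route(Task("obstacle_check", deadline_ms=20, compute_units=1)))        # edge
print(route(Task("demand_forecast", deadline_ms=60_000, compute_units=500))) # cloud
```

A task that is both urgent and too heavy for the device is the hard case. This sketch sends it to the cloud, but a real system might instead run a smaller model locally.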

These advances will help AI agent frameworks interact better with the physical world and process information at incredible scales. This opens up new possibilities for autonomous operation in many areas.

Go on and begin shaping the future of decision-making

In closing, an agentic AI framework unites sensing, reasoning and action into a self-improving decision engine.

By choosing the right agentic AI architecture and using proven deployment strategies, like hybrid edge-cloud inference and built-in safety checks, you can gain faster insights, reduced risk and measurable ROI.

If you’re ready to future-proof your operations, begin your agentic AI framework build with a high-impact pilot, then refine your deployment strategy as you scale.

At GrowthJockey, we provide AI solutions tailored to your business needs. We work with you to find the best framework for your business, guide you through agentic AI framework deployment, and make sure it integrates smoothly with your operations.

Contact us today to see how we can help you use agentic AI

FAQs on agentic AI frameworks

1. What is an agentic framework in AI?

An agentic framework in AI is like a blueprint that allows AI to work on its own with a clear purpose. It gives the structure and design patterns needed for AI to see what's happening around it, think through information, make decisions, and take action by itself.

2. What is the best agentic AI?

There is no one-size-fits-all "best" agentic AI framework. The right choice depends on the specific needs of the use case, along with technical and organisational factors.

3. What is an example of agentic AI?

A prominent agentic AI example is a financial management system that autonomously monitors market conditions, evaluates investment opportunities, and adjusts portfolio allocations to optimise returns while managing risk.

This system monitors real-time market data, analyses economic trends and company performance, and executes trades based on preset strategies. Unlike basic automation tools, it can adapt to changes in the market, learn from past actions, and make complex decisions based on multiple factors.

4. Is ChatGPT an agentic AI?

ChatGPT is not a true agentic AI because it doesn't act autonomously. It processes information and gives responses but mainly reacts to prompts instead of pursuing goals or taking actions on its own.